Andrej Karpathy: "There's some over-prediction going on in the industry..."

Dwarkesh Patel: "What do you think will take a decade to accomplish? What are the bottlenecks?"

Andrej Karpathy: "Actually making it work."

Six months ago, in April 2025, Dwarkesh announced the AI 2027 project on his podcast, interviewing authors Daniel Kokotajlo and Scott Alexander. Now, Karpathy has justified his much longer timelines to Dwarkesh, explaining what's holding back coding agents, the first step in the AI 2027 timeline:

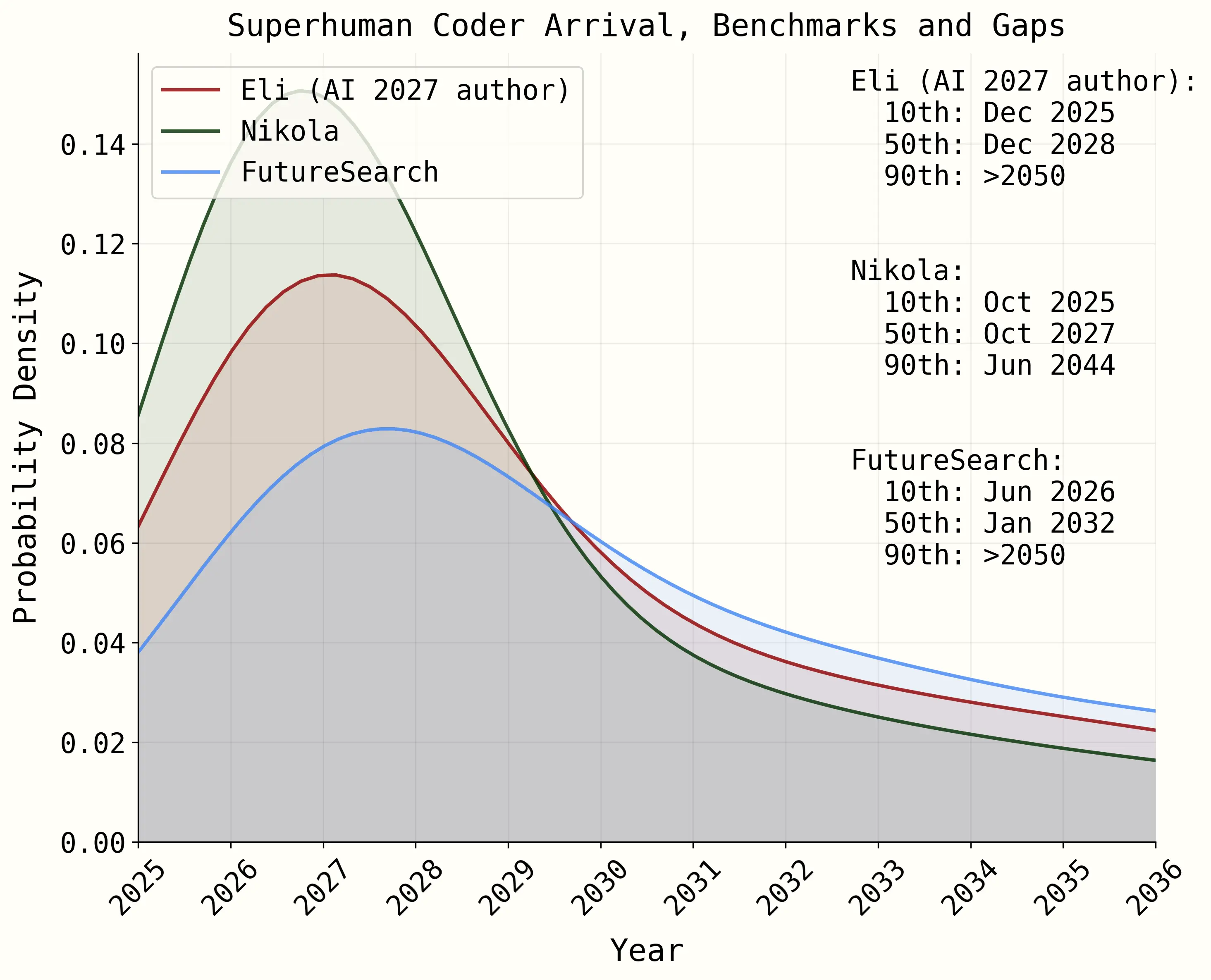

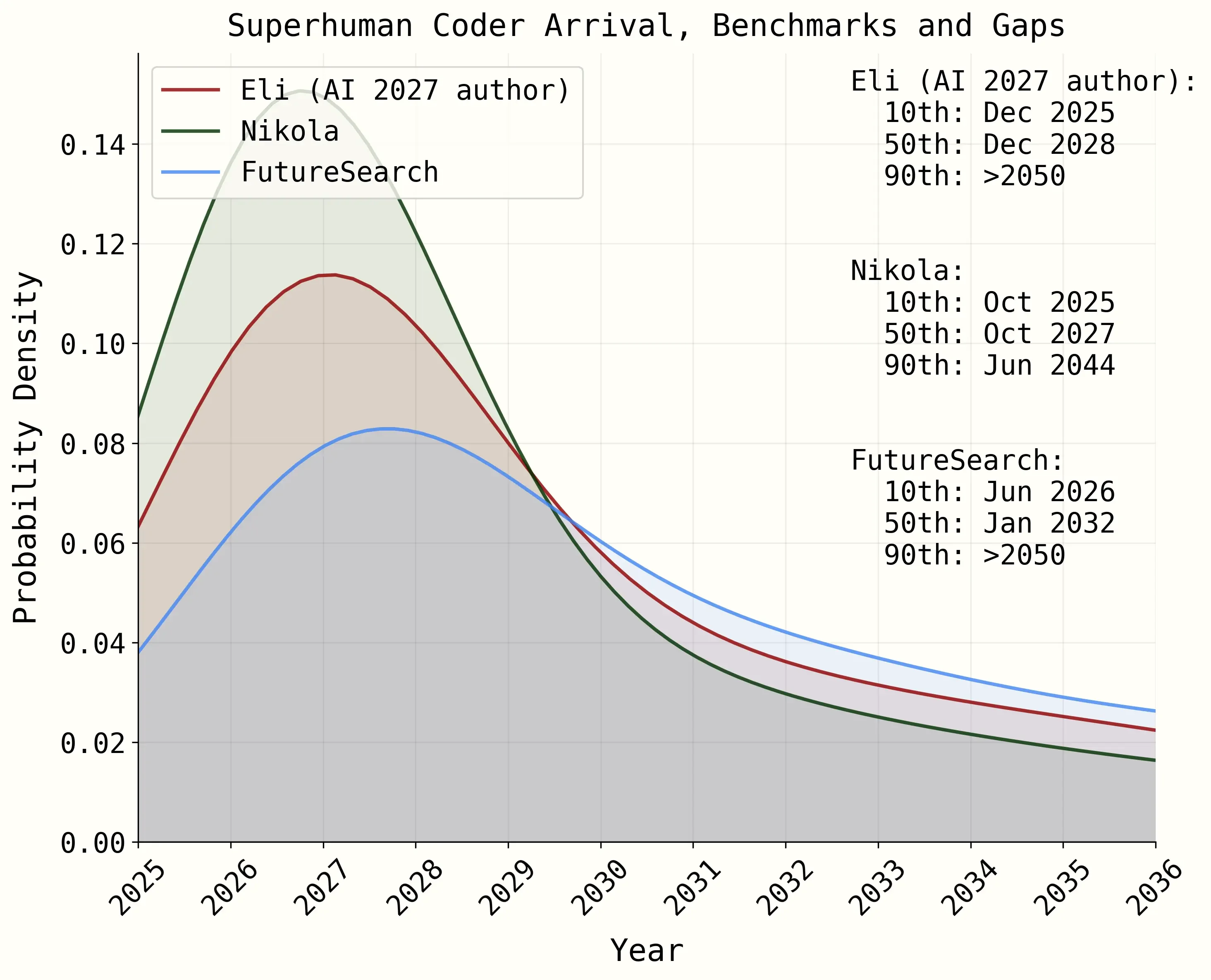

FutureSearch co-authored the AI 2027 timeline forecast. We predicted that Superhuman Coders would take about 3x longer than the other AI Futures forecasters predicted.

The Not-So-Fast Thesis

For AI experts, Karpathy's view is a better counterargument to short timelines than ours. But for non-AI-experts, we think the practical considerations we raised are worth reflecting on with 6 more months of evidence. As forecasters, this is more of an "outside view" - regardless of how exactly AI improves, what problems might slow down an R&D-based takeoff scenario?

One key point was: "Commercial Success May Trump the Race to AGI". We wrote:

So far OpenAI, the leading contender to be the company in the AI 2027 story, has spoken more about consumer revenue growth and less about transformative AI.

This piece requires at least one frontier lab to dedicate the majority of their resources towards building AI for their own internal use. We have reason to doubt that many of them will.

An AI takeoff as soon as 2027, in the scenario, depends on a stupendous capital investment in running a vast number of expensive AI agents to do AI research inside the companies. So are they actually preparing for this, and trying it?

Are AI Companies Focusing on R&D Speedups?

So, since April 2025, what have we learned about frontier labs investing their AI into superhuman coding to accelerate their internal rate of R&D? Here is a quick assessment:

- Anthropic: Heavy focus on R&D speedup via coding agents, notably Claude Code being used extensively internally.

- OpenAI: Moved strongly towards consumer products, e.g. with the Sora app and shopping features. Did build Codex, seemingly to compete with Claude Code.

- Google DeepMind: No change, similar emphasis on fundamental research, always invested heavily in developer productivity.

- xAI: Focusing on Grok for the X algorithm, sexy companions. Grok 4 is not a top tier coding model and likely not speeding up their R&D at all.

- Meta: Sexy companions, ads, and Zuckerberg clearly talking like "superintelligence" is a feature set for consumers, not a Shoggoth.

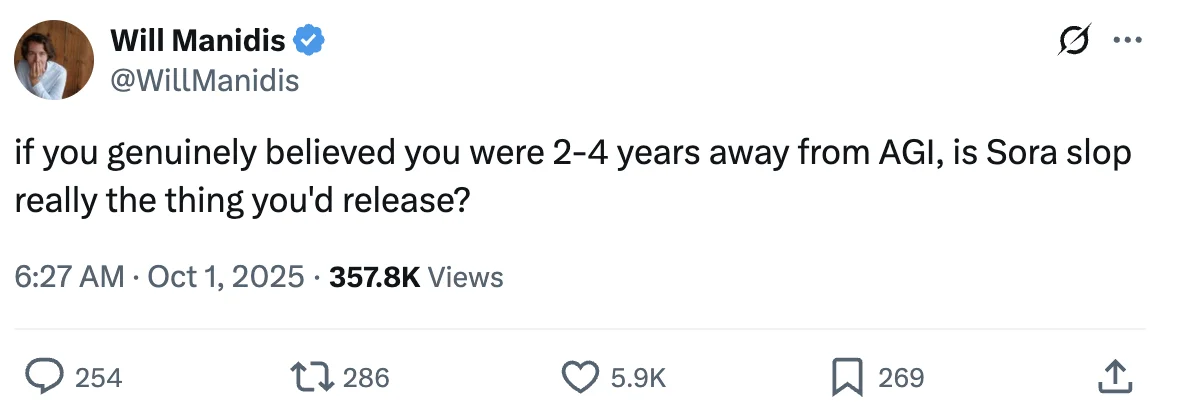

As many have pointed out:

Will Manidis (X)

Most importantly: if Anthropic is in fact the one frontier lab focused heavily on internal R&D speedup, then it matters that they are also the most safety-conscious lab, with their Responsible Scaling Policy, and likely to intervene or slow down at certain risk levels. To me, this significantly reduces the chance of an AI 2027-like scenario with them as the "OpenBrain".

And if Google turns out to be "OpenBrain", they are so large, so slow moving, and so regulated, that it seems unlikely they could drive anything like the AI 2027 scenario.

So we take this as (light) evidence in favor of our original view that an R&D-based AI takeoff will take much longer than the AI 2027 scenario.

What about the other AI 2027 forecasters? How have they updated since then?

The AI Futures Timeline Updates

Here is how we interpret the other AI Futures authors' updated timelines.

Two key notes: First, these sources are cherry-picked from many great writings from these folks where they engage substantially on the details of the arguments. Second, it's not completely clear what outcome the AI 2027 forecasters in these quotes are referring to, whether a specific milestone or the entire takeoff scenario. AGI has multiple definitions, and the specific AI 2027 scenario of course won't play out exactly that way. So keep in mind these quotes are not specifically about the arrival of superhuman coding.

Daniel Kokotajlo - Since AI 2027: Median +1 year, to 2029

"When AI 2027 was published my median was 2028, now it's slipped to 2029 as a result of improved timelines models & slightly slower than expected progress in general"

Eli Lifland - Since AI 2027: Median ~2032 (giving 15-20% to AGI by 2027)

"My median is roughly 2032, but with AGI by 2027 as a serious possibility (~15-20%)."

Nikola Jurkovic - Since AI 2027: Median updated from ~3 to ~4 years

"This has been one of the most important results for my personal timelines to date. It was a big part of the reason why I recently updated from ~3 year median to ~4 year median to AI that can automate >95% of remote jobs from 2022"

We also take this as (light) evidence that the difference between our forecasts and theirs in April 2025 was directionally correct. Of course, if a world-transforming AI takeoff happens in 2029 or 2032 as these forecasters think, they were on net closer to the truth than we were. The beauty of public forecasting is tracking the change over time, not so much the pure accuracy on one forecast.

I (Dan Schwarz) personally really appreciate Karpathy's not-doomer, not-skeptic, middle-ground take:

"my AI timelines are about 5-10X pessimistic w.r.t. what you'll find in your neighborhood SF AI house party or on your twitter timeline, but still quite optimistic w.r.t. a rising tide of AI deniers and skeptics."

—Andrej Karpathy (X, October 18, 2025)

Personally, I think I'm closer to the SF house party timeline than Karpathy (and than the FutureSearch median forecast). I suppose we'll check in once more, 6 months from now, and see!