Key Takeaways

- FutureSearch correctly predicted in June 2024 that Siri would not switch to a large OpenAI model anytime soon, days before Apple's WWDC announcement

- By March 2025, Bloomberg reported that a "fully conversational Siri" won't arrive until at least 2027, validating our forecast

- Our key insight was modeling the base rates for such tech partnerships and for such rumors turning out to be true

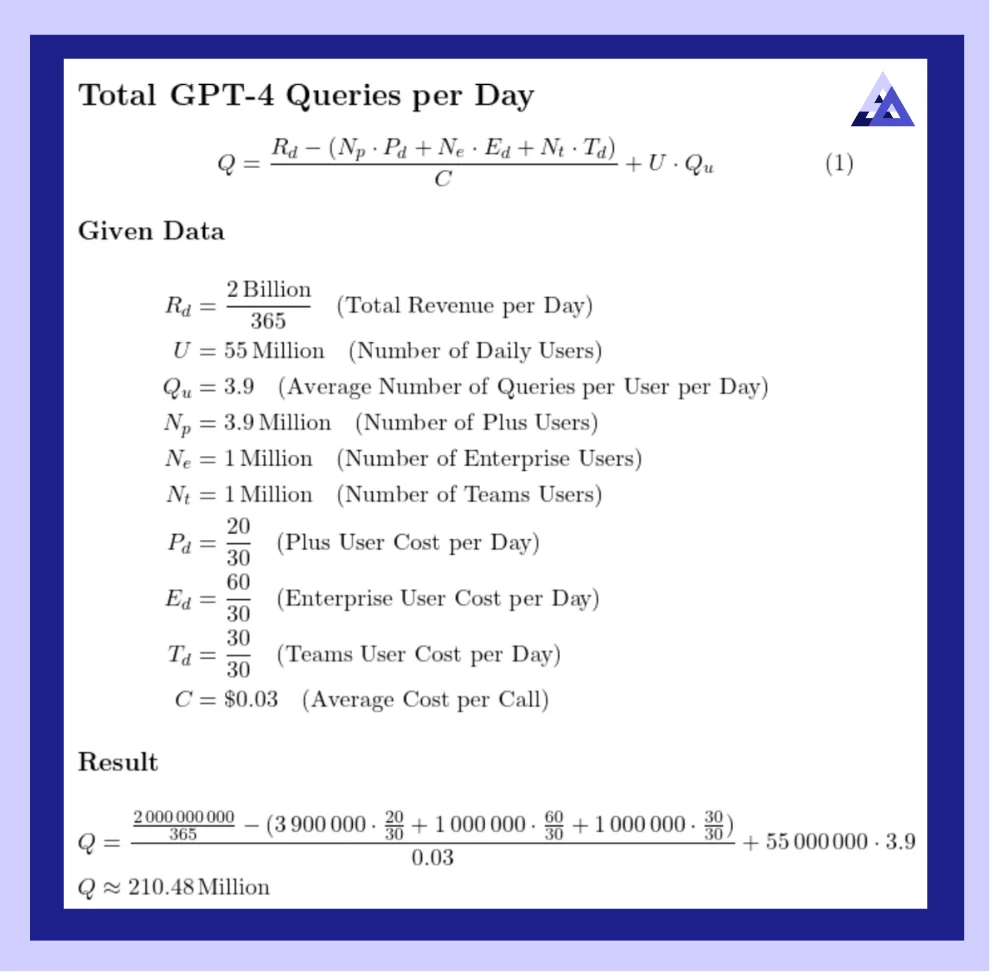

- We also modeled Siri's daily queries (~830M) vs ChatGPT's (~210M) as of Spring 2024, showing backend capacity was a major constraint

What Happened

On June 10, 2024, Apple and OpenAI announced at Apple's WWDC keynote that they were adding ChatGPT intelligence to Siri.

Days earlier, at Manifest in Berkeley, FutureSearch publicly predicted Siri would not switch to using a large OpenAI model anytime soon.

By March 2025, Bloomberg journalist Mark Gurman reported that a "fully conversational Siri" won't be available until at least 2027, confirming our forecast was correct.

How did we predict this? We used two complementary forecasting approaches: the Outside View (historical trends and base rates) and the Inside View (technical constraints and capabilities).

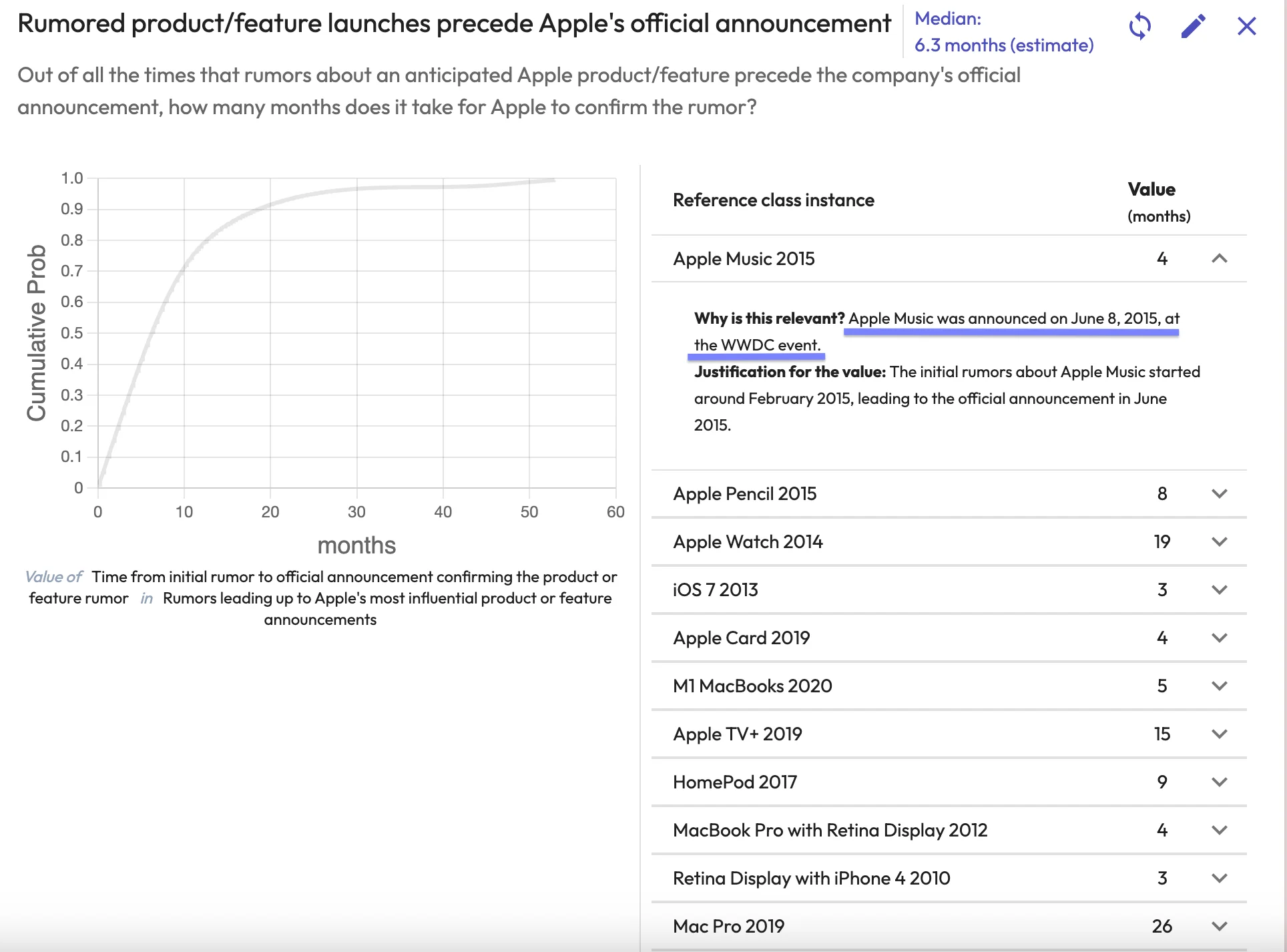

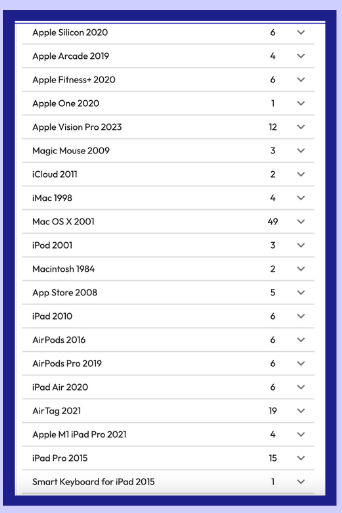

Outside View: Historical Base Rates Suggested Low Probability

The Outside View analyzes historical trends and base rates to estimate probabilities. We examined several key questions:

How Often Does Apple Depend on External Partners?

We analyzed Apple's history of partnerships for core features. Historical data showed only a 47% likelihood that Apple would depend on external partners for a key technology like Siri intelligence.

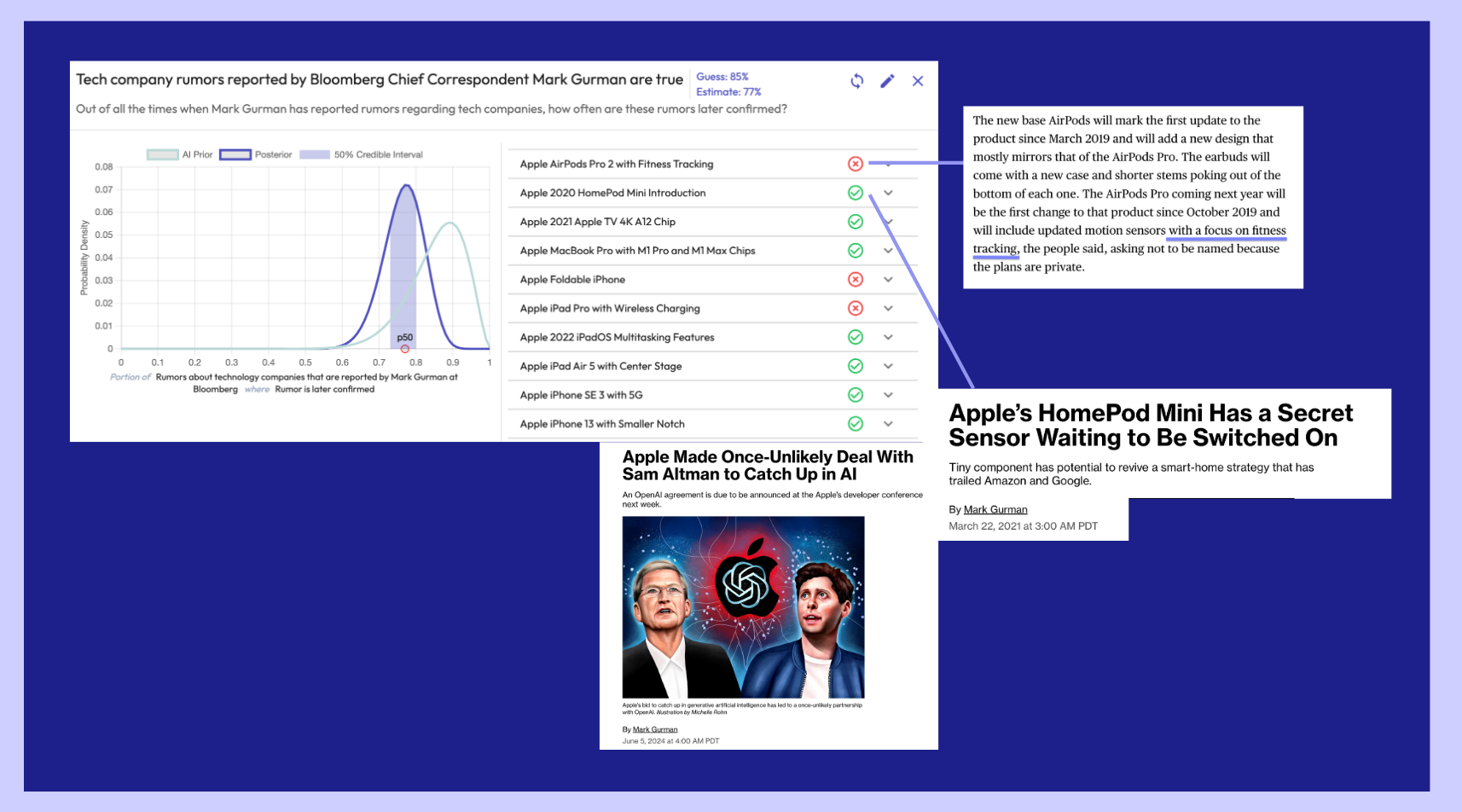

How Accurate Are Tech Partnership Rumors?

We evaluated the base-rate accuracy of tech partnership rumors and found that they turn out to be true about 51% of the time.

What About Bloomberg and Mark Gurman Specifically?

Bloomberg's Mark Gurman is notably reliable for Apple rumors. Our analysis showed 77-80% accuracy for Gurman's Apple reporting.

Combining these base rates suggested a low probability of Apple fully integrating ChatGPT into Siri.

Inside View: Technical Constraints Made Full Integration Unlikely

The Inside View examines specific technical factors and constraints. We focused on two critical questions:

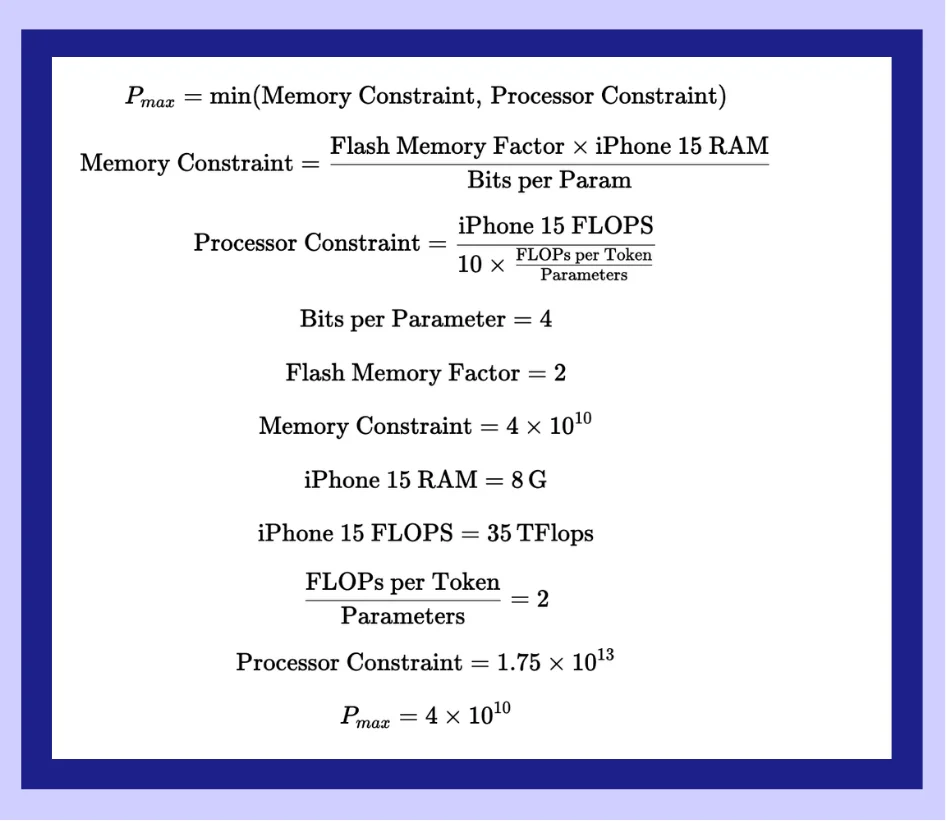

1. How Good Will Apple's On-Device LLM Be?

We estimated that Apple could fit a 40B-parameter model on an iPhone, which would be sufficient for many Siri tasks and reduce dependency on external models.

This capability meant Apple didn't necessarily need ChatGPT for basic intelligence improvements.
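To give a feel for the arithmetic behind an on-device size estimate, here is a minimal sketch of how weight storage scales with parameter count and bits per weight. The precision and memory budget behind the 40B figure are not stated here, so the bit widths below are illustrative assumptions, and `weight_storage_gb` is a hypothetical helper, not part of the original forecast model.

```python
def weight_storage_gb(num_params: float, bits_per_weight: int) -> float:
    """Storage needed for the model weights alone, in gigabytes (10**9 bytes).

    Ignores activations, KV cache, and runtime overhead.
    """
    return num_params * bits_per_weight / 8 / 1e9


PARAMS = 40e9  # the 40B-parameter figure from the text

# Assumed, illustrative quantization levels (not from the original forecast).
for bits in (16, 8, 4, 2):
    print(f"{bits}-bit weights: {weight_storage_gb(PARAMS, bits):.0f} GB")
```

Under these assumptions the weights alone come to 80, 40, 20, and 10 GB respectively, so any on-device estimate of this size depends heavily on the quantization level assumed.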

2. What Percentage of Siri Calls Could OpenAI's Backend Handle?

The query volume analysis revealed a massive scaling challenge:

- Siri handles ~830 million daily queries

- ChatGPT handles ~210 million daily queries

Siri's daily volume is about four times ChatGPT's. OpenAI's infrastructure would need to roughly quadruple just to match Siri's full query load, before counting ChatGPT's existing traffic, making a complete transition highly impractical in the near term.
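The capacity comparison can be sketched in a few lines. This is a rough illustration using the two volume figures above; it assumes, hypothetically, that a Siri query costs as much to serve as a ChatGPT query and that OpenAI had no spare capacity. The function and key names are made up for this example.

```python
# Approximate daily query volumes quoted in the text (spring 2024).
SIRI_DAILY_QUERIES = 830_000_000
CHATGPT_DAILY_QUERIES = 210_000_000


def capacity_summary(siri: int, chatgpt: int) -> dict[str, float]:
    """Compare Siri's query volume with ChatGPT's current volume.

    Assumes equal serving cost per query and no spare backend capacity.
    """
    return {
        # How many times larger Siri's volume is than ChatGPT's.
        "siri_to_chatgpt_ratio": siri / chatgpt,
        # Share of Siri queries a ChatGPT-sized backend could serve
        # if it served nothing else.
        "share_of_siri_servable": min(1.0, chatgpt / siri),
        # Growth factor needed to serve Siri on top of existing ChatGPT traffic.
        "growth_to_serve_both": (siri + chatgpt) / chatgpt,
    }


summary = capacity_summary(SIRI_DAILY_QUERIES, CHATGPT_DAILY_QUERIES)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

With these inputs the ratio is about 3.95, a ChatGPT-sized backend could cover about 25% of Siri calls, and serving both workloads would take roughly 4.95 times the current capacity.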

Privacy and Control Concerns

Beyond capacity, routing all Siri queries through OpenAI's servers would conflict with Apple's privacy-first philosophy. Apple has consistently emphasized on-device processing and data minimization.

Three Possible Integration Scenarios

Given these constraints, we identified three plausible scenarios for the Apple-OpenAI partnership:

Scenario 1: Minimal Integration ("Siri by OpenAI" Label)

Apple could add "OpenAI" or "ChatGPT" branding to Siri with minimal actual changes to the underlying system. This would capture marketing benefits while avoiding technical challenges.

Scenario 2: Optional GPT-4 Powered Siri

Apple could offer GPT-4 powered Siri as an opt-in feature or paid subscription, limiting the query volume to manageable levels while maintaining standard Siri for most users.

Scenario 3: On-Premises ChatGPT in Apple Datacenters

OpenAI could provide ChatGPT model weights for Apple to run in its own datacenters, preserving privacy and control while leveraging OpenAI's technology.

Reality appears closest to Scenario 2, with limited ChatGPT integration available as an option rather than a core Siri replacement.

The Bottom Line: Apple's Different AI Strategy

Apple's approach to AI integration reveals a fundamentally different strategy from competitors:

- Privacy over power: Apple prioritizes user privacy and on-device processing over having the most capable AI assistant.

- Measured integration: Rather than wholesale adoption of external AI, Apple is taking a gradual, controlled approach.

- Long-term investment: Apple is building its own AI capabilities rather than becoming dependent on external providers.

As the Bloomberg report confirmed, a fully conversational Siri powered by advanced AI won't arrive until 2027 at the earliest. This validates our forecast and demonstrates that Apple is playing a longer game focused on sustainable, privacy-preserving AI integration.

Forecasting Methodology: Combining Views

Our accurate prediction demonstrates the value of combining Outside View and Inside View forecasting:

- Outside View provides historical context and base rates that prevent overconfidence

- Inside View examines specific technical and business constraints

- Together, they produce more reliable forecasts than either approach alone

This methodology allowed us to look beyond the hype of the Apple-OpenAI announcement and accurately predict the partnership's limited scope.