Key Takeaways

- When building agents, developers need to prompt agents to persist in some cases when errors arise, or give up and try a different strategy in others

- Commercial Deep Research agents show failure modes in both directions: failures to persist when they should, and failures to adapt when they should

- Agents can "spin out" on hard tasks, as examples from Deep Research show, repeatedly doing the same ineffective actions without changing strategy

- Deep Research tools show that on messy domains like web research, agents are not yet human level

- Multi-agent frameworks, trying multiple approaches in parallel instead of one long series of tool calls sequentially, is likely the way forward

How Deep Research Tools Think Differently Than Humans

"Deep Research" tools—Gemini Deep Research, OpenAI Deep Research, and Perplexity Deep Research—are web agents, meaning they perform many LLM calls and use tools like web search. Like humans, when they hit obstacles, agents sometimes persist, and sometimes change their approach and adapt.

But they don't do this the way humans do. Which isn't necessarily bad! But it does often lead to surprising failures on easy tasks for humans, as we reported previously for OpenAI Deep Research ("OAIDR").

We've now also run Perplexity Deep Research ("PDR") on FutureSearch's internal evals of tricky web research tasks with clearly correct answers and rigorous scoring.

Here's how Deep Research systems act inhumanly, on two opposite dimensions: failures to persist, and failures to adapt.

When to Persist: The CyberSeek Source-Tracing Example

Consider this query which PDR gets right and OAIDR fails:

"What is the original source of the claim 'Over 200,000 more cybersecurity workers are needed in the United States to close the talent gap, according to data from CyberSeek' made by SecurityWeek?"

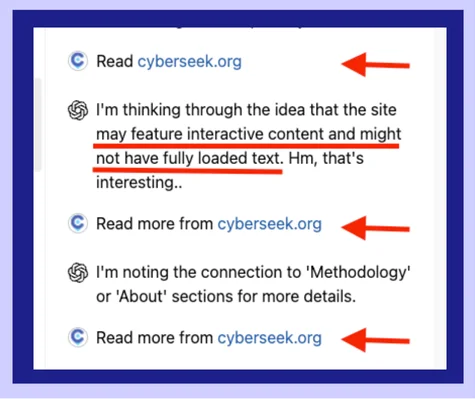

OAIDR Gives Up Too Early

First, OAIDR tries, and fails, to read the interactive map on cyberseek.org. It claims to "read more," but this likely refers to repeated failures to read dynamic content.

While it mentions Lightcast job postings data, OAIDR doesn't actually get to the bottom of it. Instead, it gives up and incorrectly identifies the source.

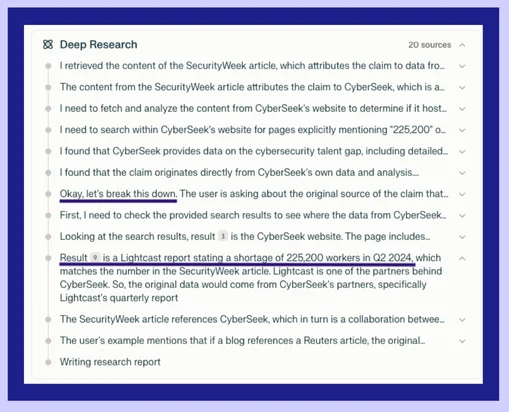

PDR Persists and Succeeds

In contrast, Perplexity Deep Research persists and successfully traces the claim to its original source: Lightcast job postings data.

This demonstrates how persistence matters when following complex citation chains through multiple layers of sources.

When to Adapt: The UK Excess Deaths Example

Now consider a different failure mode: the inability to adapt when an initial approach isn't working.

Task: Find the total excess deaths per million in the UK by the end of 2023.

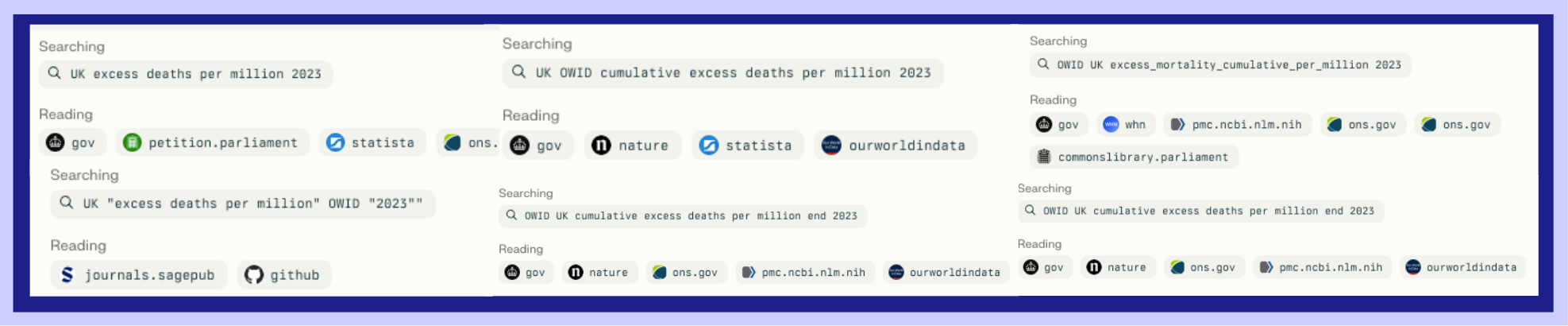

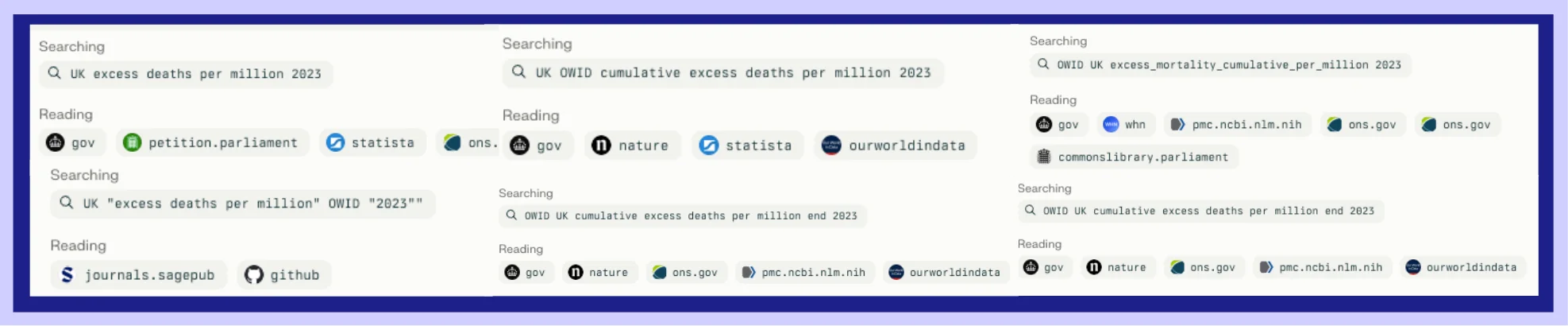

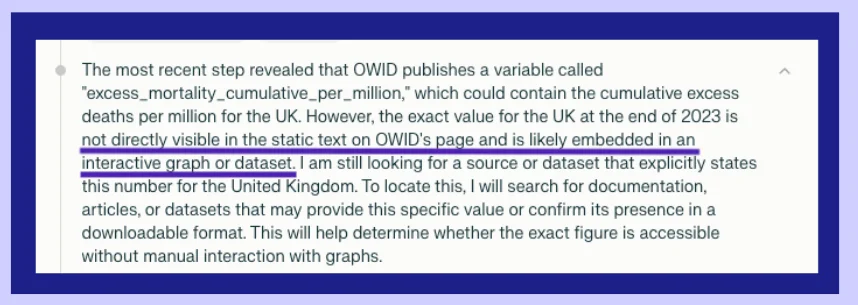

PDR Repeats the Same Failed Searches Six Times

When faced with this task, PDR performed essentially the same search six times without successfully adapting its approach to find the correct information.

The tool saw potential alternative research methods but failed to effectively pursue them. Instead of changing strategy, it kept hoping the same approach would yield different results.

The Correct Answer Required Strategic Adaptation

The correct approach required recognizing that direct searches weren't working and adapting to use structured datasets like Our World in Data.

Both PDR and OAIDR struggled with this task, showing that knowing when to abandon an unproductive approach is a key weakness of current Deep Research systems.

The Pattern: Fast on Easy Tasks, Unreliable on Hard Ones

Through extensive testing, we've identified a clear pattern in how Deep Research tools perform:

Easy tasks: Fast and reliable Medium-difficulty tasks: Unpredictable performance Hard tasks: Systems "spin out," repeatedly doing the same ineffective actions

The fundamental issue is that current Deep Research systems lack the metacognitive ability to recognize when they're stuck and need to fundamentally change their approach.

The Bottom Line: Use With Clear-Eyed Expectations

Deep Research tools represent impressive progress in AI-powered research capabilities. But they have significant limitations in judgment—both in knowing when to persist through obstacles and when to adapt their strategy.

For users: Understand that these tools work best on straightforward information synthesis tasks. For complex research requiring rigorous accuracy, human oversight remains essential.

For researchers: The gap between "sometimes works impressively" and "reliably solves complex problems" remains substantial. Building agents that know when to persist and when to adapt is a key frontier in AI research capabilities.