Key Takeaways

- OpenAI is experiencing the fastest revenue growth in tech history, scaling from $1B in 2023 to $3.7B in 2024 (270% growth) and an estimated $8B ARR in March 2025, growing at 200% annualized.

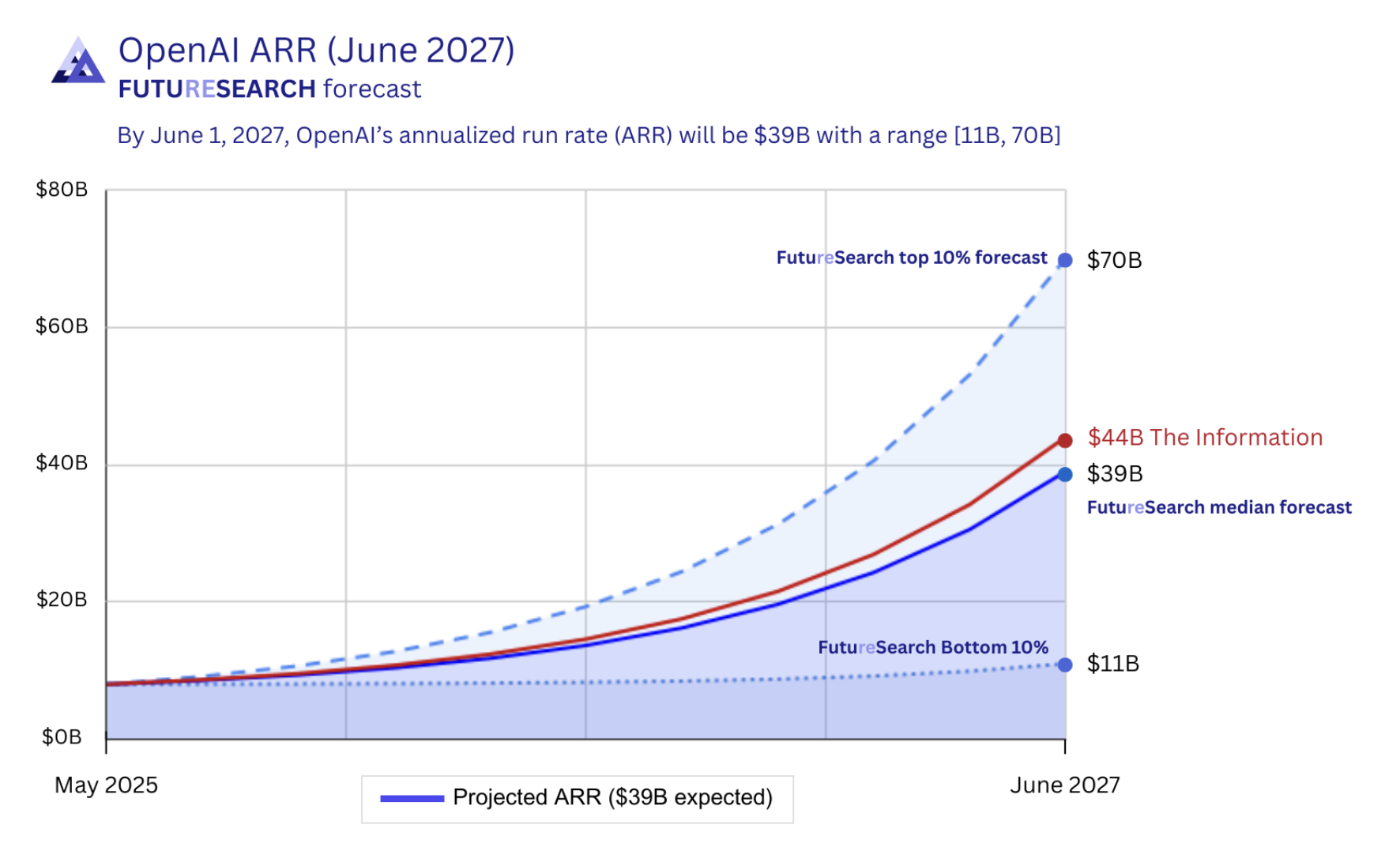

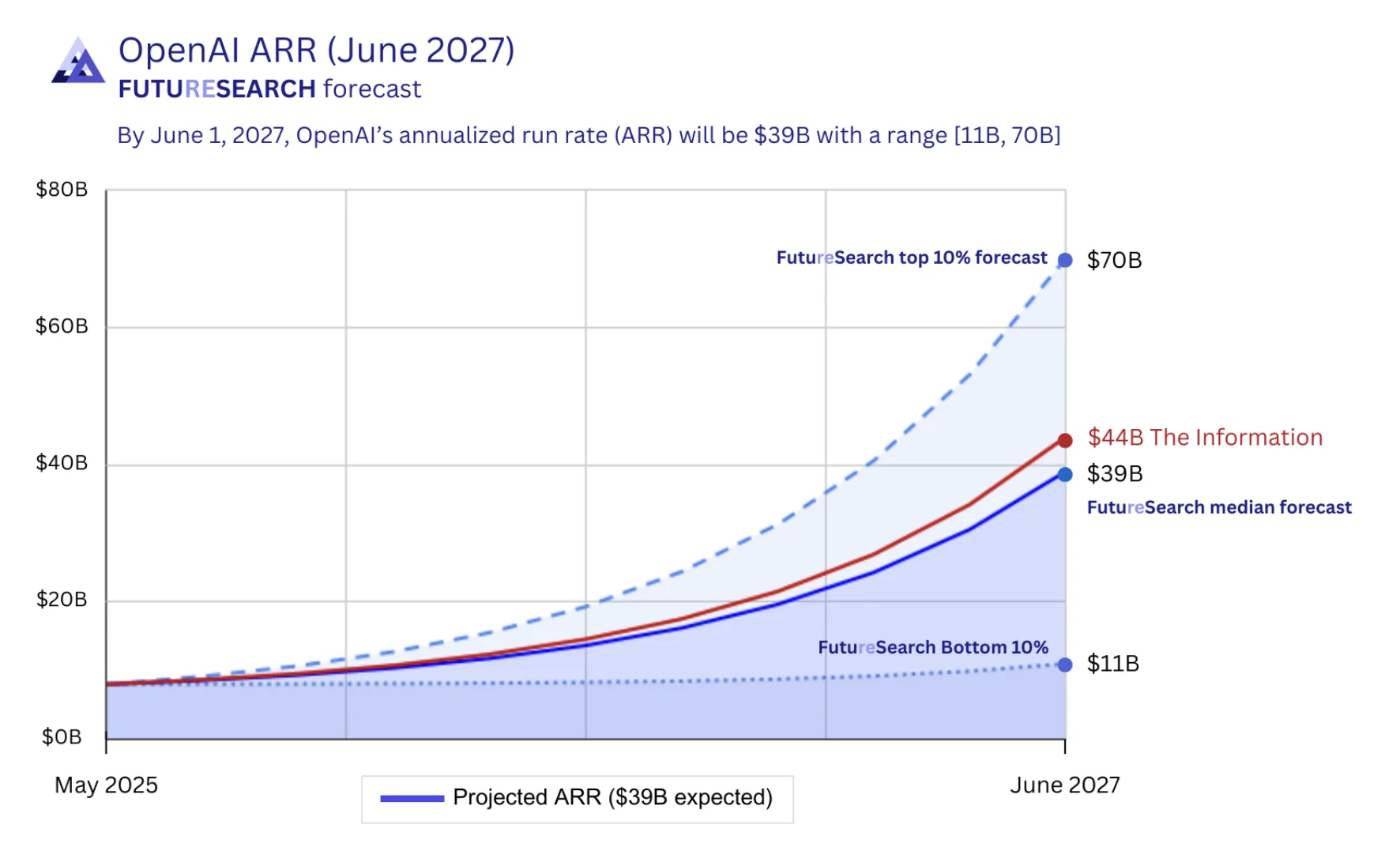

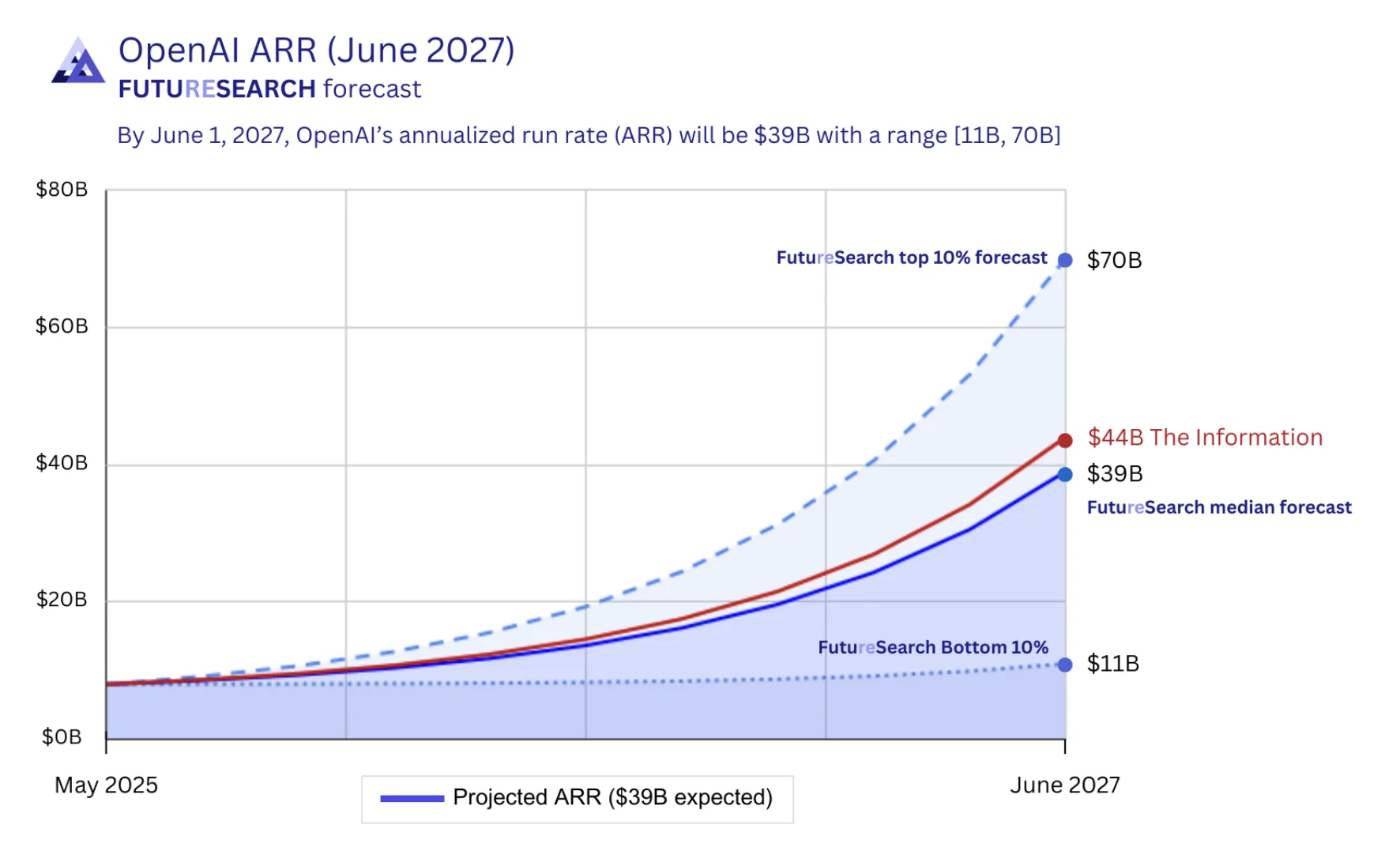

- Our forecast: $39B ARR by mid-2027, with an 80% confidence interval of [$11B, $70B]—an extraordinarily wide range reflecting unprecedented market uncertainty.

- Consumer subscriptions dominate revenue (85% of ARR), growing from 2M paid users in July 2023 to 15.5M by January 2025, with ChatGPT Pro adding $300M annually.

- Enterprise adoption is accelerating even faster, surging from 150K users in January 2024 to 2M+ by February 2025—enterprise AI spending grew 500% in 2024.

- API business faces commoditization: the API was generating about $510M in annualized revenue by mid-2024, but prices are decreasing exponentially due to intense competition and low switching costs.

- Success hinges on AI agents: Our 90th percentile scenario ($70B ARR) requires autonomous agents that can replace human workers in customer service, software engineering, and research—a highly uncertain bet.

- OpenAI is hemorrhaging money: losses grew from $540M (2022) to $1.5B (2023) to $5B (2024), with projections of $44B in total losses before profitability in 2029.

- The Microsoft relationship is deteriorating: Microsoft is developing in-house models to replace OpenAI, partnering with Meta, and investing in rivals like Inflection AI.

- Competition is intensifying: Claude 3.5 Sonnet leads on coding benchmarks, Gemini 2.0 Flash matches GPT-4o at 4x lower cost, and xAI raised $6B.

- Talent exodus poses existential risk: Key leaders including Ilya Sutskever (co-founder), Andrej Karpathy (founding team), and top safety researchers have departed, with many joining competitors like Anthropic and xAI.

- The 10th percentile scenario ($11B ARR) is plausible: If Microsoft competition materializes, talent drain accelerates, and data center capacity constrains growth, OpenAI's revenue could peak in 2026 and decline by 2027.

OpenAI's Unprecedented Growth Trajectory

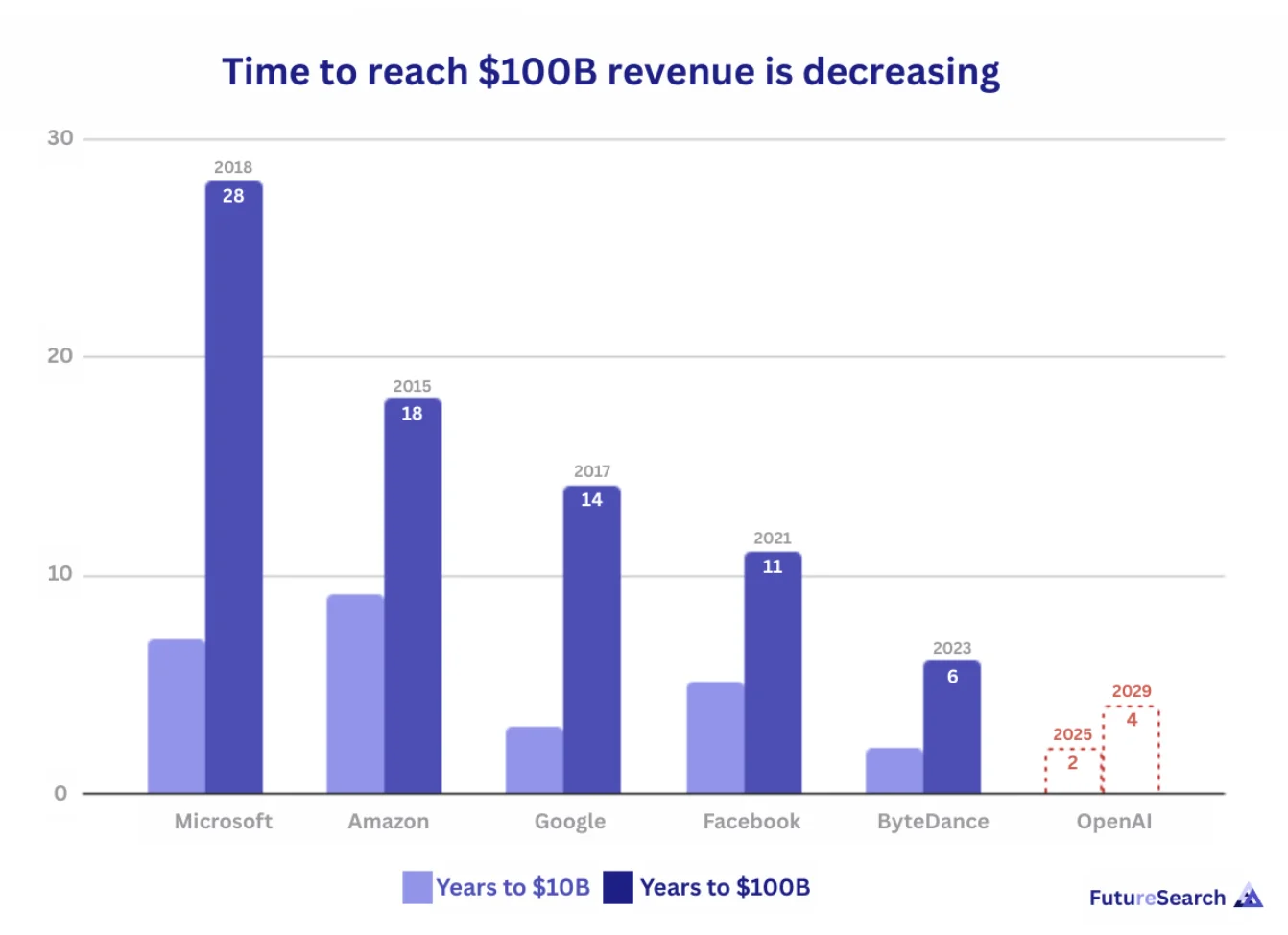

OpenAI is experiencing the fastest revenue growth of any major technology company in history. The company grew from $1B revenue in 2023 to $3.7B in 2024—a 270% increase—likely the fastest growth rate ever achieved by a company at that scale.

To put this in perspective, the time required to scale from $1B to $100B in revenue has been decreasing by roughly 40% per decade:

- Microsoft: 28 years (1990 → 2018)

- Amazon: 18 years (1997 → 2015)

- Google: 14 years (2003 → 2017)

- Facebook: 11 years (2009 → 2021)

- ByteDance: 6 years (2017 → 2023)

- OpenAI (projected): 4 years (2023 → 2027)
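As a rough check on the "roughly 40% per decade" figure, the sketch below fits a straight line to the logarithm of time-to-$100B against each company's $1B year, using the five historical data points above. OpenAI's projection is left out because it is the quantity being extrapolated. An ordinary least-squares fit on log years is our assumption about how such a trend could be estimated, not necessarily how the figure was derived.

```python
import math

# (year the company hit $1B revenue, years taken to go from $1B to $100B)
HISTORY = {
    "Microsoft": (1990, 28),
    "Amazon": (1997, 18),
    "Google": (2003, 14),
    "Facebook": (2009, 11),
    "ByteDance": (2017, 6),
}


def fit_log_linear(points):
    """Ordinary least squares of ln(years_to_100B) on start year.

    Returns (slope, intercept) so that ln(years) ~= intercept + slope * year.
    """
    xs = [year for year, _ in points]
    ys = [math.log(duration) for _, duration in points]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


slope, intercept = fit_log_linear(HISTORY.values())
decline_per_decade = 1 - math.exp(10 * slope)     # fractional drop per 10 years
trend_2023 = math.exp(intercept + slope * 2023)   # trend value for a 2023 start

print(f"time to $100B shrinks ~{decline_per_decade:.0%} per decade")
print(f"trend implies ~{trend_2023:.1f} years for a company starting in 2023")
```

With these inputs the fit gives a decline of a little over 40% per decade, and the trend line lands between four and five years for a company starting in 2023, in the same range as the OpenAI projection.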

If OpenAI continues this trajectory, it will reach $10B in revenue in 2025 and potentially $100B by 2027—faster than any company in history.

Current Revenue Estimates

Our estimate for OpenAI as of March 2025 is $8B ARR, growing at approximately 200% annualized (Appendix A). The key data points are Sam Altman's statement that ARR was $3.4B in June 2024, recent statements by the CFO indicating $3.7B in revenue for 2024, and a recent statement that the company expects to reach $12.7B in revenue in 2025.

We estimate ARR of $6B in December 2024 with $3.7B in revenue for all of 2024. Note that revenue for a particular calendar year is substantially less than the ARR at year-end due to rapid growth.
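The gap between year-end ARR and calendar-year revenue follows from integrating the run rate over the year. Assuming, for illustration, that ARR grows smoothly by a constant factor g over the year and ends at A_end, calendar revenue is A_end × (1 − 1/g) / ln g. The sketch below plugs in the $6B December 2024 ARR estimate and a 3x (200%) annual growth factor; the smooth exponential shape is a simplifying assumption, and the monthly sum is only a numerical cross-check of the closed form.

```python
import math


def calendar_year_revenue(arr_end: float, growth_factor: float) -> float:
    """Revenue booked over a year whose ARR grows smoothly by `growth_factor`.

    ARR at fraction t of the year is arr_end * g ** (t - 1), so integrating
    over t in [0, 1] gives arr_end * (1 - 1/g) / ln(g).
    """
    if growth_factor == 1:
        return arr_end  # flat ARR: revenue equals the run rate
    g = growth_factor
    return arr_end * (1 - 1 / g) / math.log(g)


def monthly_sum_revenue(arr_end: float, growth_factor: float) -> float:
    """Cross-check: sum twelve monthly slices of ARR / 12, using mid-month ARR."""
    total = 0.0
    for month in range(12):
        t = (month + 0.5) / 12  # midpoint of each month as a fraction of the year
        total += arr_end * growth_factor ** (t - 1) / 12
    return total


revenue_2024 = calendar_year_revenue(arr_end=6.0, growth_factor=3.0)  # $B
print(f"closed form: ${revenue_2024:.2f}B, monthly sum: "
      f"${monthly_sum_revenue(6.0, 3.0):.2f}B, year-end ARR: $6.00B")
```

The result, about $3.6B, is close to the reported $3.7B for 2024, which suggests the $6B year-end ARR estimate and roughly tripling ARR over the year hang together.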

Revenue Breakdown: Where the Money Comes From

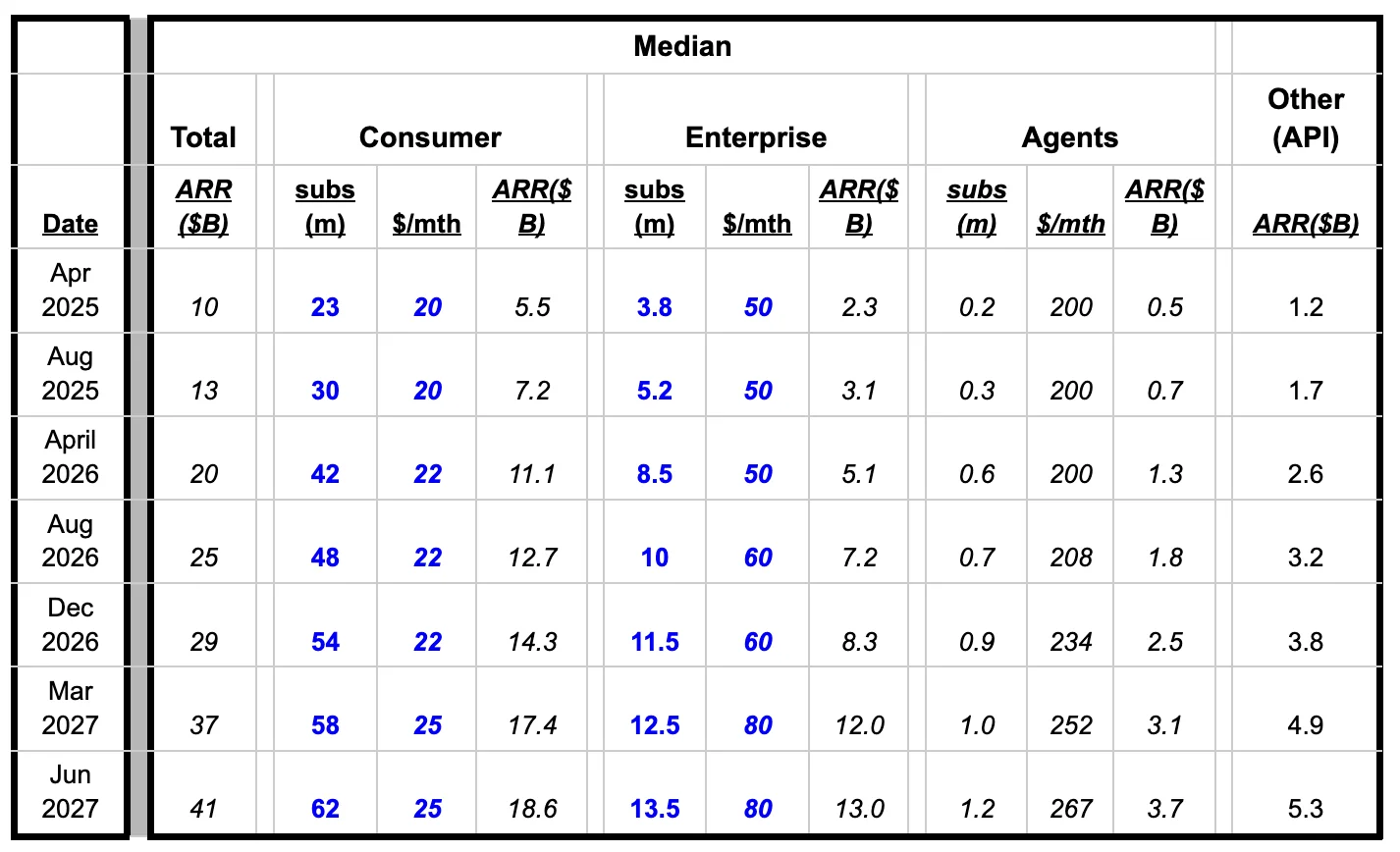

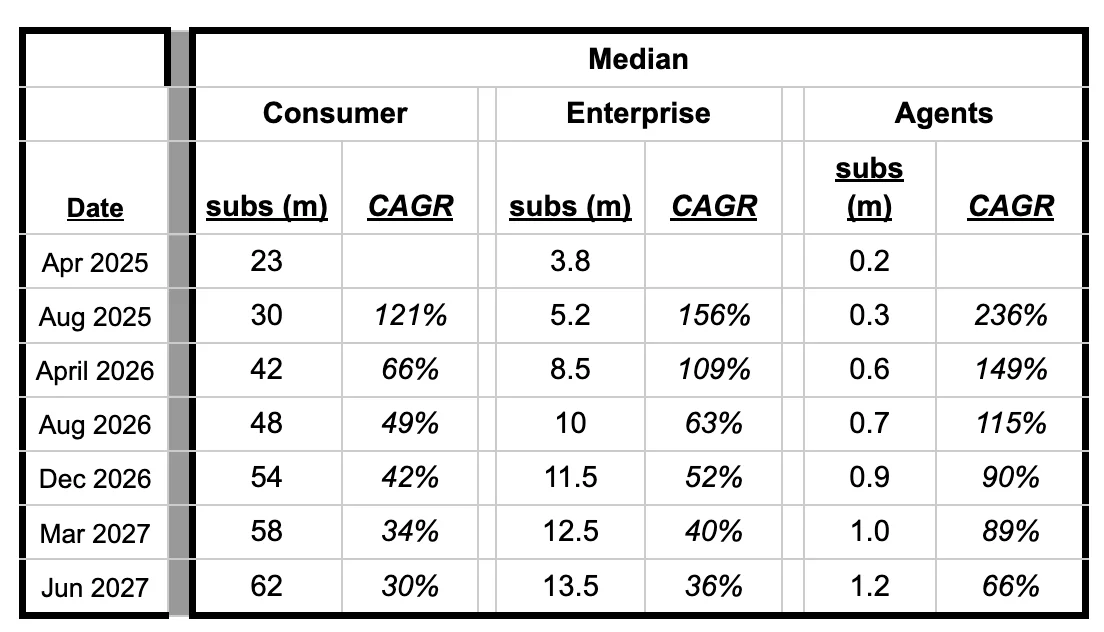

We model OpenAI's revenue across four main categories: Consumer subscriptions, Enterprise deployments, AI Agents, and API/Other. Our median forecast shows significant growth across all segments:

Consumer Subscriber Growth

ChatGPT consumer paid subscribers have grown from 2M in July 2023 to 3.9M in March 2024, 7.7M in June 2024, 10M in September 2024, and 15.5M by January 2025.

Sam Altman recently tweeted that OpenAI added 1M users in one hour spurred by high demand for their new image generation feature. If current growth continues, ChatGPT could reach over 30M subscribers by August 2025.
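The 30M figure follows from simple compounding. The sketch below annualizes the growth between the July 2023 (2M) and January 2025 (15.5M) subscriber counts, then extends that rate seven months to August 2025. Treating growth as a constant rate between those two endpoints is an assumption; the intermediate data points show it has not been perfectly smooth.

```python
def annualized_growth_factor(start: float, end: float, months: float) -> float:
    """Constant annual growth factor that takes `start` to `end` in `months`."""
    return (end / start) ** (12 / months)


def project(value: float, annual_factor: float, months: float) -> float:
    """Compound `value` forward by `months` at a constant annual factor."""
    return value * annual_factor ** (months / 12)


# Paid consumer subscribers, in millions
g = annualized_growth_factor(start=2.0, end=15.5, months=18)  # Jul 2023 -> Jan 2025
aug_2025 = project(15.5, g, months=7)                          # Jan 2025 -> Aug 2025

print(f"annual growth: {g - 1:.0%}; projected Aug 2025 subscribers: {aug_2025:.1f}M")
```

This gives roughly 290% annual growth and about 34M subscribers by August 2025, consistent with "over 30M".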

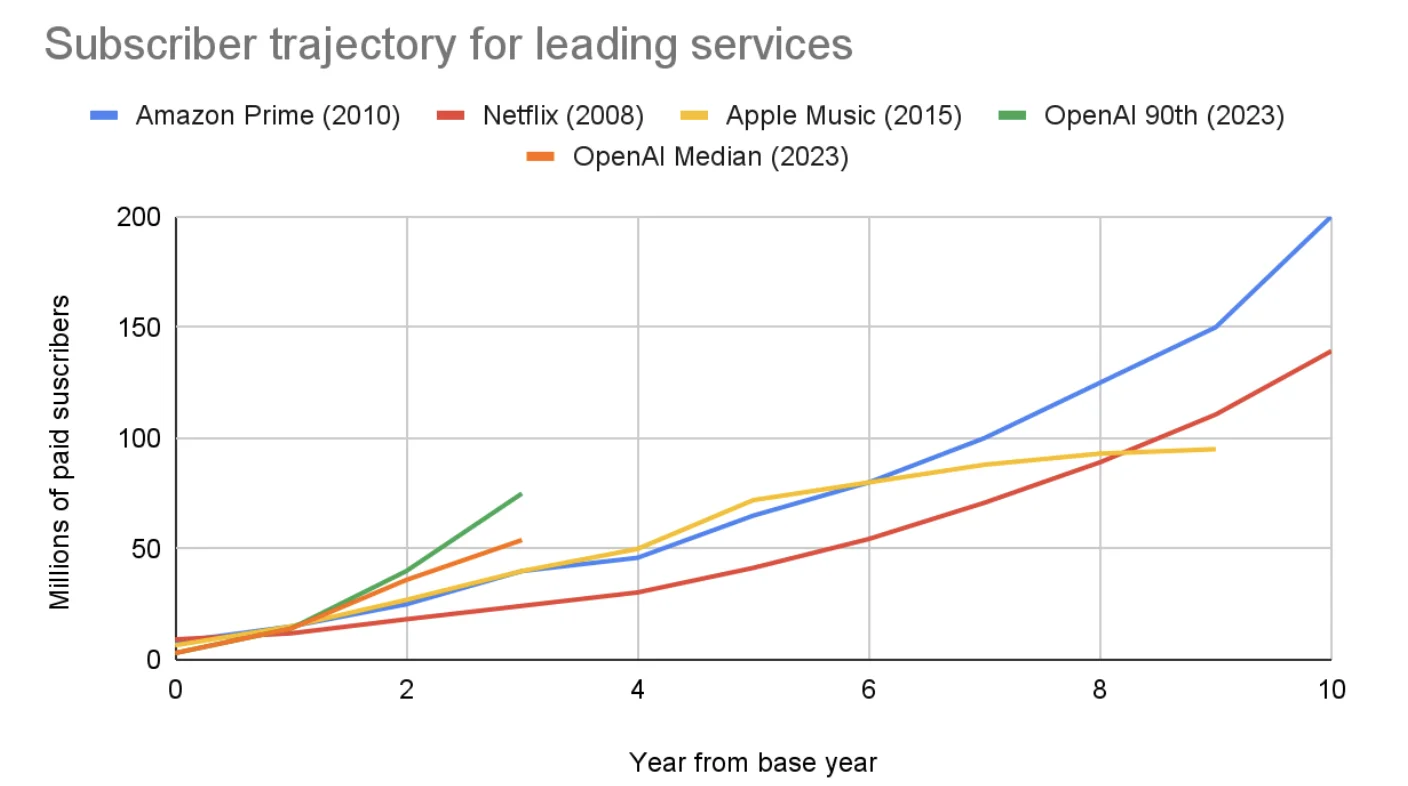

As a reference, the graph below shows the paid subscriber count for some of the most popular subscription services ever. Even in our median scenario, ChatGPT subscriptions increase to 62M by mid-2027—faster growth than Amazon Prime, Netflix, or Apple Music when they were at similar scale.

The revenue growth is even more impressive when each company is compared from the year it hit $1B in revenue. ChatGPT's ~200% annual growth rate is noticeably higher than that of other successful subscription services.

Enterprise Subscriber Growth

ChatGPT Enterprise paid subscribers have grown from 150,000 in January 2024 to 600,000 in April 2024 to 1.2M in June 2024 to 2M+ in February 2025.

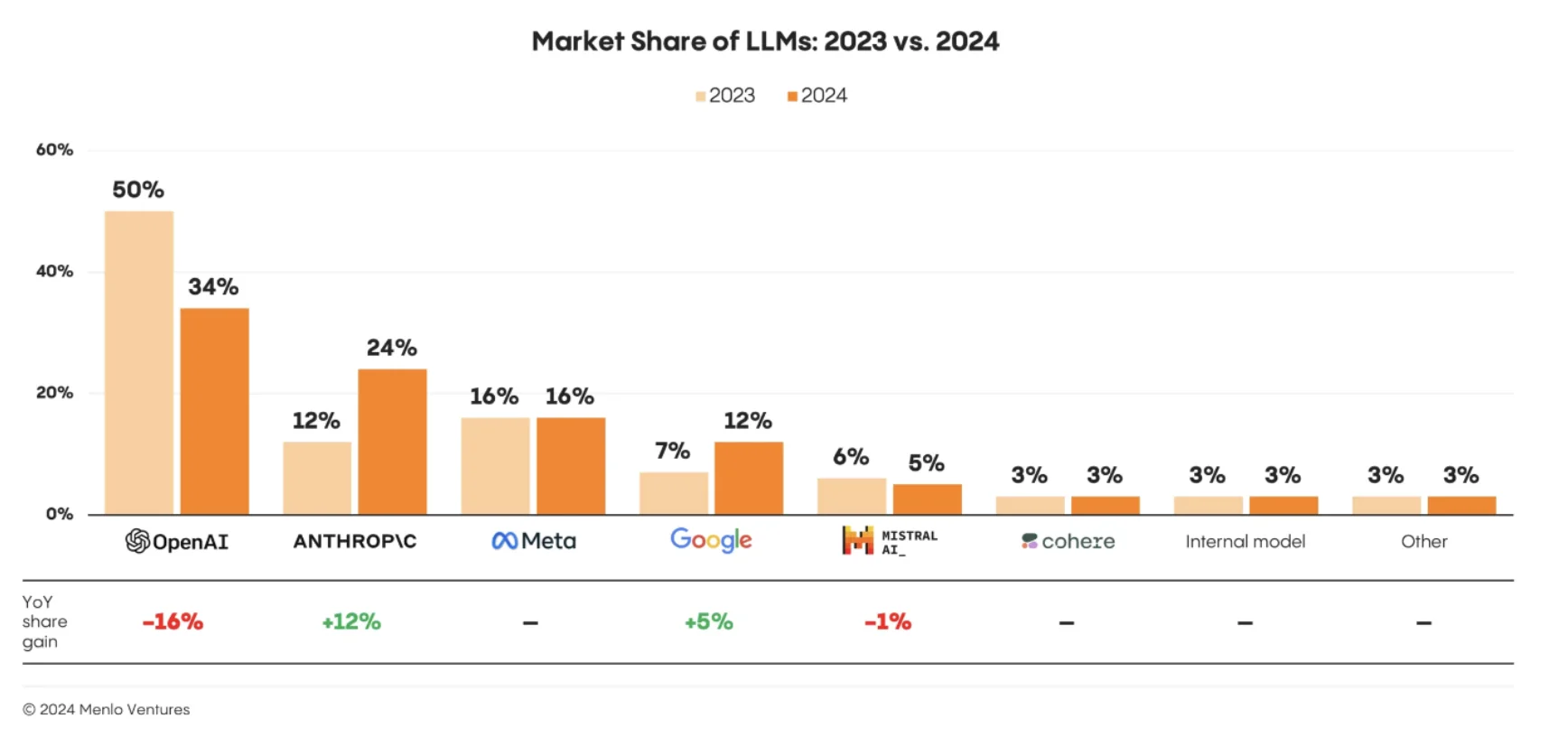

The enterprise growth rate may be higher than the consumer growth rate. MenloVC estimates that "AI spending surged to $13.8 billion [in 2024], more than 6x the $2.3 billion spent in 2023", corresponding to roughly 500% year-over-year growth. Microsoft reported AI revenue of $13B ARR in February 2025, up from $10B three months earlier, an annualized growth rate of about 185%.
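Both figures in this paragraph are compound growth rates. A 6x year-over-year increase is 500% growth, and a three-month move from $10B to $13B is annualized by raising the three-month ratio to the fourth power. A minimal sketch of that conversion:

```python
def annualized_rate(start: float, end: float, months: float) -> float:
    """Compound annual growth rate implied by going from start to end in `months`."""
    return (end / start) ** (12 / months) - 1


menlo = annualized_rate(2.3, 13.8, months=12)      # enterprise GenAI spend, $B
microsoft = annualized_rate(10.0, 13.0, months=3)  # Microsoft AI ARR, $B

print(f"MenloVC: {menlo:.0%}  Microsoft: {microsoft:.0%}")
```

The Microsoft figure works out to about 186%, matching the ~185% figure up to rounding.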

Our Enterprise trajectories are much steeper than those of other successful companies at the point they hit $1B in revenue. However, this reflects the tremendous growth OpenAI has already demonstrated. Our 90th percentile scenario has enterprise growth moderating to 52% by mid-2027, with our median forecast at 36%.

For reference, Oracle and Salesforce—two of the biggest Enterprise software providers—have over $50B and $35B in annual revenue respectively. From this perspective, the $9B enterprise ARR by 2027 in our median scenario is aggressive but not unrealistic.

API Revenue Growth

Estimates suggest the API business was generating approximately $510 million annually by mid-2024—around 15% of OpenAI's ARR. Despite this growth, internal projections and industry analysis suggest OpenAI expects API revenue to be outpaced by both subscription-based products (like ChatGPT Plus, Team, and Enterprise) and emerging offerings such as AI agents. By mid-2024, subscriptions accounted for approximately 85% of OpenAI's ARR. Nevertheless, the API remains a strategically important component of OpenAI's business, offering scalable, usage-based income and enabling a wide ecosystem of applications across industries.

OpenAI's API by the numbers:

- June 2024 API Total Revenue: $41.2M

- Gross profit margin (from revenue and inference costs): 75% (June 2024)

- June 2024 API ARR: $510M; 15% of total OpenAI ARR

As of late 2024, API usage reached 1.4 billion tokens per minute, up dramatically from 200 million at the start of the year.

A significant challenge: API prices are exponentially decreasing as competition intensifies. Currently, switching API providers is easy for companies relying on LLM APIs. This creates pricing pressure and customer concentration risk.

In our breakdown, we model API as a fixed percentage of OpenAI's overall revenue, growing to $5B by mid-2027 in our median scenario.

Assistants & Agents: The Wild Card

OpenAI launched ChatGPT Pro in December 2024, designed for anyone who uses "research-grade intelligence daily to accelerate their productivity." Its offerings include Deep Research and Operator, a research preview feature that can use a built-in web browser to perform tasks for users (e.g. booking travel, buying groceries) autonomously.

There are at least 125,000 ChatGPT Pro subscribers, bringing in at least $25 million a month, or $300 million a year. As of January 1, ChatGPT Pro accounted for nearly 5.8% of OpenAI's consumer sales, with ChatGPT Plus making up the remaining 94.2%.
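The Pro figures are straightforward run-rate arithmetic. Assuming every one of the 125,000 subscribers pays ChatGPT Pro's $200 monthly list price (discounts ignored), Pro brings in $25M a month. The 5.8% share then implies the size of the whole consumer business; reading that share as a share of run-rate revenue is our assumption.

```python
PRO_SUBSCRIBERS = 125_000
PRO_PRICE_PER_MONTH = 200       # USD, ChatGPT Pro list price; discounts ignored
PRO_SHARE_OF_CONSUMER = 0.058   # Pro's share of consumer sales as of Jan 1

pro_monthly = PRO_SUBSCRIBERS * PRO_PRICE_PER_MONTH
pro_annual = pro_monthly * 12
# If Pro is 5.8% of consumer sales, the total consumer run rate is Pro / share.
implied_consumer_annual = pro_annual / PRO_SHARE_OF_CONSUMER

print(f"Pro: ${pro_monthly / 1e6:.0f}M/month, ${pro_annual / 1e6:.0f}M/year")
print(f"implied consumer run rate: ${implied_consumer_annual / 1e9:.1f}B/year")
```

Under that reading, the consumer run rate at the start of 2025 would be roughly $5.2B a year.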

Going a step beyond Deep Research, OpenAI has already talked about charging $20,000 per month for "PhD-level research" agents: semi-autonomous replacement workers capable of fully replacing humans in some existing jobs. SoftBank reportedly plans to spend $3B on AI agents and other products from OpenAI. Low-end agents are projected to cost as much as $2,000 per month, while mid-tier agents for software development could cost $10,000 a month.

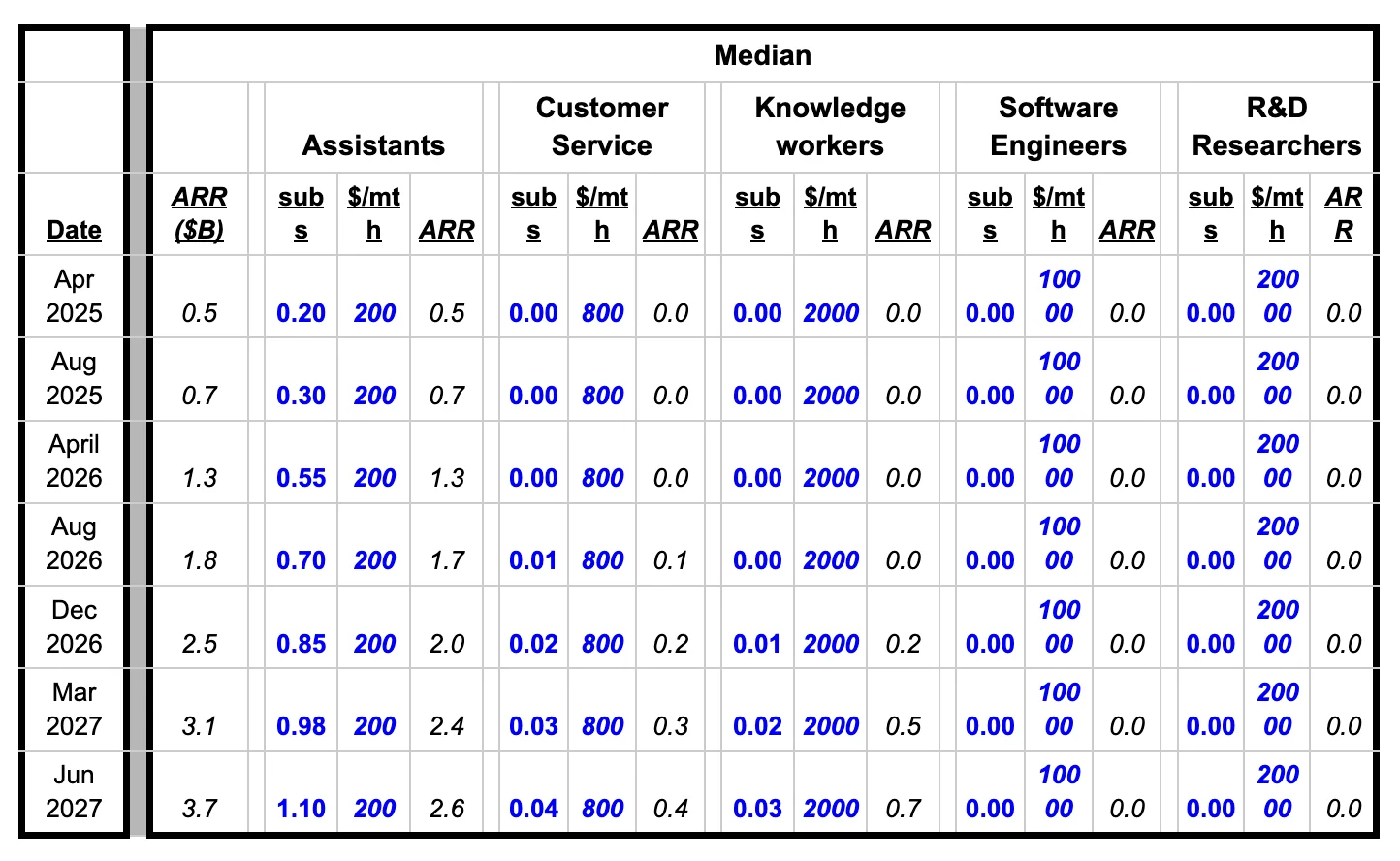

While agents represent huge potential upside, they are largely unproven and speculative. The table below shows where revenue might come from in an optimistic scenario. We break down agent revenue into these categories: Assistants (including Deep Research), Customer Service, Knowledge workers, Software engineers, and R&D researchers.

We envision assistants as requiring human oversight, similar to Deep Research. We treat the other agent categories as effective replacement workers, with correspondingly higher price points. The table below shows our 90th percentile forecast.

In our median scenario, agents only provide $3.7B in ARR by mid-2027, consisting primarily of assistants like Deep Research with minimal penetration of autonomous replacement workers. Our median forecast assumes no AI software engineers or R&D researchers by mid-2027—only 1.1 million subscribers paying $200/month for assistants like Deep Research, plus nascent customer service and knowledge worker agents.
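The assistant portion of the median agent forecast can be checked directly: 1.1 million subscribers at $200 a month. The remainder of the $3.7B is what the median attributes to nascent customer service and knowledge-worker agents. The seat count at the end converts that remainder at the $2,000-per-month low-end agent price purely for scale; it is an illustrative conversion, not a figure from our model.

```python
ASSISTANT_SUBSCRIBERS = 1_100_000
ASSISTANT_PRICE_PER_MONTH = 200        # USD, Deep Research-style assistants
MEDIAN_AGENT_ARR = 3.7e9               # USD, median scenario, mid-2027
LOW_END_AGENT_PRICE_PER_MONTH = 2_000  # USD, reported low-end agent price

assistant_arr = ASSISTANT_SUBSCRIBERS * ASSISTANT_PRICE_PER_MONTH * 12
other_agent_arr = MEDIAN_AGENT_ARR - assistant_arr
# Illustration only: seats needed if all remaining ARR came from low-end agents
equivalent_low_end_seats = other_agent_arr / (LOW_END_AGENT_PRICE_PER_MONTH * 12)

print(f"assistants: ${assistant_arr / 1e9:.2f}B; other agents: ${other_agent_arr / 1e9:.2f}B")
print(f"~{equivalent_low_end_seats:,.0f} low-end agent seats at $2,000/month")
```

Assistants thus account for about $2.6B of the $3.7B, leaving roughly $1.1B for customer service and knowledge-worker agents.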

Part 2: Major Headwinds

OpenAI is Losing Lots of Money

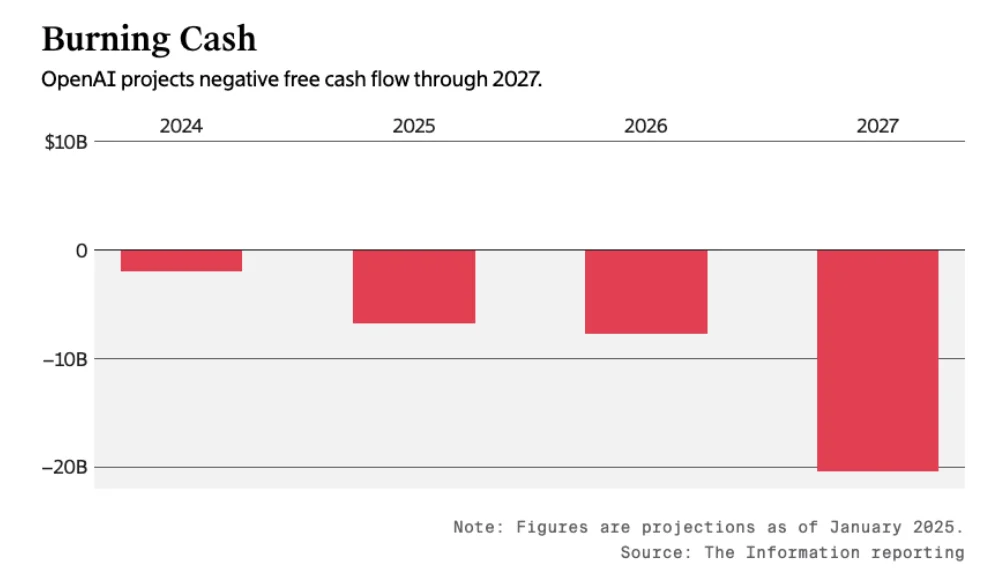

OpenAI lost $0.54B in 2022, an estimated $1.5B in 2023, and $5.0B in 2024. Losses are expected to steepen sharply, even accounting for exponential revenue growth.

In total, OpenAI projects it will lose $44B before turning a profit in 2029. These losses are not without precedent (Uber lost $31.5B before turning a profit), but they do require a vast amount of investment.

The Microsoft Conundrum

Microsoft's investment is largely Azure credits, not cash. From Microsoft's first $1B investment in 2019, through its ~$2B of infusions in 2020-2022, to its ~$10B multi-year investment in 2023, Microsoft's reports and company disclosures make clear that its payments to OpenAI were largely in kind. Semafor reports that only "a fraction" of this investment was cash.

The majority of this investment was in fact building datacenters, providing GPU capacity for large training runs, and hosting inference. None of this type of investment is cash that can cover OpenAI's losses, although it does directly defray cash costs that OpenAI would otherwise have to pay.

Even more challenging for OpenAI's balance sheet and cash flow is that the investment included revenue-sharing agreements. Much of OpenAI's API traffic comes from Azure, and Microsoft takes a large share of that revenue, potentially as high as 80%. OpenAI also cannot freely move to other clouds: it signed an exclusivity agreement, renewed in 2025, making Azure its only cloud provider. That agreement was modified in January 2025 so that OpenAI may use other companies' data centers (for example Oracle's) if Azure cannot scale to meet its needs, but Microsoft retains a right of first refusal.

Any investors or regulators reviewing OpenAI's books would expect to see the Microsoft Azure credits recorded as both income and expense. Microsoft is essentially paying itself – providing OpenAI prepaid access to Azure rather than handing OpenAI cash to spend elsewhere. (It treats the Azure credits as deferred payment for OpenAI's IP, aligning with how ASC 606 handles non-cash consideration.)

Yet a further challenge for future cash flow is lock-in. Microsoft has the right to use OpenAI models in Microsoft products like GitHub Copilot, making it harder for OpenAI to charge monopoly prices even if it does have the best AI offerings. And since Azure is the exclusive cloud platform for the OpenAI API, OpenAI must use Azure, which could constrain its pricing power even if it does have a unique technical advantage.

A final concern is the effect of Microsoft's terms on other investors. Microsoft takes 75% of all OpenAI profits until its investment is recouped, and 49% of profits until it hits $92B. While OpenAI is many years away from profitability, this provision might still discourage other investors, and it could slow OpenAI's growth, since cash flows will need to be diverted to paying Microsoft rather than reinvested in the business.
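To see how long such a profit-sharing waterfall could bite, here is a minimal sketch. It takes the $13B figure commonly cited for Microsoft's investment as the amount to be recouped and reads the $92B as a cap on Microsoft's cumulative take; both are simplifying assumptions, and the real contract terms may differ.

```python
def microsoft_cumulative_take(cumulative_profit: float,
                              investment: float = 13.0,
                              cap: float = 92.0,
                              recoup_share: float = 0.75,
                              capped_share: float = 0.49) -> float:
    """Microsoft's cumulative share ($B) of OpenAI's cumulative profit ($B).

    Simplified two-phase waterfall (assumed terms):
      1. Microsoft takes `recoup_share` of profits until it has received `investment`.
      2. It then takes `capped_share` until its total take reaches `cap`.
      3. After that, OpenAI keeps all further profit.
    """
    if cumulative_profit <= 0:
        return 0.0
    phase1_profit = investment / recoup_share  # profit needed to recoup the investment
    if cumulative_profit <= phase1_profit:
        return recoup_share * cumulative_profit
    phase2_profit = (cap - investment) / capped_share  # further profit to reach the cap
    extra = cumulative_profit - phase1_profit
    if extra <= phase2_profit:
        return investment + capped_share * extra
    return cap


for profit in (10, 50, 200):
    take = microsoft_cumulative_take(profit)
    print(f"cumulative profit ${profit}B -> Microsoft ${take:.1f}B, OpenAI ${profit - take:.1f}B")
```

Under these assumptions, OpenAI would need roughly $179B of cumulative profit before Microsoft's take is capped, which illustrates why the provision could weigh on reinvestment for years.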

Microsoft Could Squeeze OpenAI

Microsoft holds the cards and could alter terms to immediately squeeze OpenAI's cashflow—by reducing credit subsidies or delaying further infusions.

Recently, Microsoft has been preparing to directly compete with OpenAI:

- It is maintaining its own branding, e.g. Copilot and Bing, rather than the GPT brand

- Microsoft is developing its own in-house models and testing them as replacements for OpenAI models in Microsoft 365 Copilot

- It is partnering with OpenAI competitors like Meta, hosting Llama models on Azure

- It is investing in OpenAI competitors like G42 and Inflection AI

Antitrust could be a concern too. In early 2025, the U.S. FTC published a report flagging that cloud providers tying up AI start-ups (as Microsoft did with OpenAI) might create "lock-in" and unfair advantages, citing Microsoft's $13B OpenAI deal as a key example. The European Commission decided not to investigate, but could change its mind if the partnership expands. Even the threat of scrutiny could lead Microsoft to unwind Azure subsidies, forcing OpenAI to pay much more for its backend.

Investor Sentiment Concerns

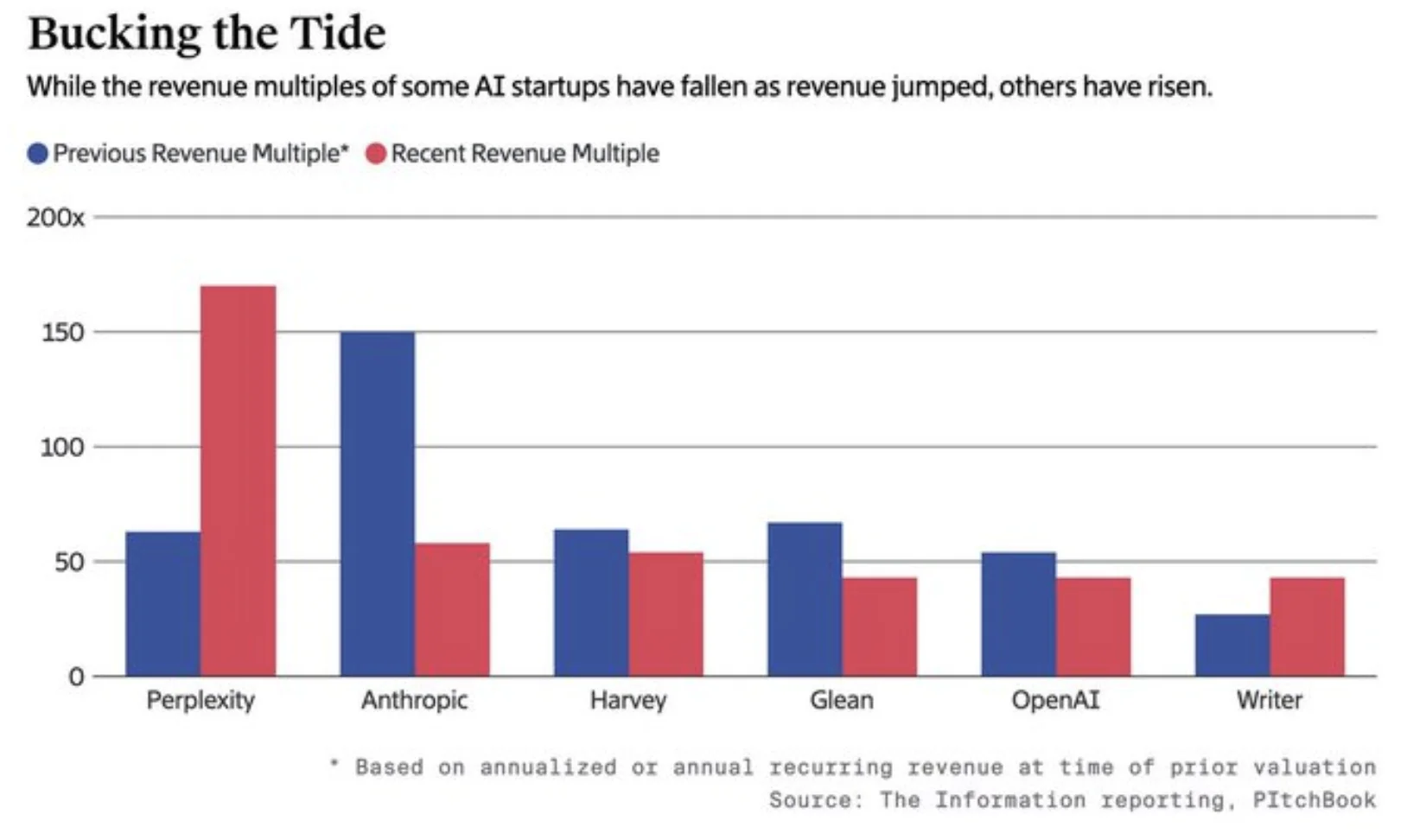

According to The Information, revenue multiples of most major AI companies have fallen in recent rounds, including OpenAI:

OpenAI's recent $6.6B round at a $157B valuation was bolstered by an overall expansion in venture capital investment: funding for generative AI startups nearly doubled in 2024, reaching about $56 billion globally (up 92% from 2023), with new involvement from sovereign wealth funds and other late-stage investors.

OpenAI's fundraising plans may be predicated on the continuation of this trend, which is uncertain.

One particular systemic risk factor is access to data. Dozens of major copyright lawsuits are pending against companies like OpenAI, Google, Meta, Microsoft, and Stability AI, alleging that their models unlawfully used copyrighted text, code, or images in their training datasets. A landmark ruling, or unexpected action from one of the bodies governing AI, could suddenly cool the entire industry.

There are also more prosaic reasons why investor enthusiasm could fall:

- OpenAI in particular has continued to scale its systems at inference time rather than training time, which makes the models an order of magnitude slower and rules them out for many applications

- Customer concentration is high, so a few key customers dropping large GenAI contracts (or simply shifting spend from OpenAI to a competitor) could spook other customers out of committing to large contracts

- The very large amounts of equity and debt OpenAI depends on are vulnerable to sudden increases in interest rates

- Clashes between the US and China could lead to more restrictive domestic US policy that interferes with the scaling of US AI companies (though it could also enhance it.)

Catastrophic Talent Drain

Research talent is arguably the scarcest resource in AI, more than GPUs, dollars, or brand mindshare. OpenAI has had a poor track record of retaining its top talent, even compared to the baseline of tech companies, which rarely command loyalty. And in fact some of its biggest competitors came straight from OpenAI.

Tracking this talent is relevant both for understanding where the most brilliant thinkers currently work and for predicting future loyalty.

Indeed, OpenAI has one of the worst talent-retention records of any tech company in recent history. Only two of the 11 founding members are still at the company. (And one of them, co-founder and President Greg Brockman, took a multi-month sabbatical just as OpenAI's supremacy in model quality began to waver.)

The first significant wave of departures occurred in 2021, when 8 core staff members left to found Anthropic. Anthropic produced the first non-OpenAI model to build up a cadre of users loyal to it over ChatGPT, and as of March 2025 it arguably has the single best overall foundation model, Claude 3.7 Sonnet. The OpenAI alumni who made this possible at Anthropic include:

- Dario Amodei (VP of Research)

- Daniela Amodei (VP of Safety & Policy)

- Jack Clark (Policy Director)

- Sam McCandlish (Research Lead)

- Tom Brown (Member of Technical Staff, led GPT-3 engineering)

Since 2021, dozens of other OpenAI researchers have left to join Anthropic, most notably OpenAI cofounder John Schulman in 2024 (see below).

The second significant wave of departures occurred after the failed sacking of Sam Altman by the Board of Directors in November 2023. The most prominent recent departures have been:

- Andrej Karpathy, co-founder (Feb 2024)

- Ilya Sutskever, co-founder and chief scientist (May 2024)

- Jan Leike, Head of Alignment (May 2024)

- John Schulman, co-founder (Aug 2024)

- Mira Murati, CTO (Sep 2024)

- Bob McGrew, chief research officer (Sep 2024)

- Barrett Zoph, VP of Research (Sep 2024)

- Tim Brooks, research co-lead, Sora (Oct 2024)

- Luke Metz, o1 model leader (Oct 2024)

- Jonathan Lachman, head of special projects (Nov 2024)

- Lilian Weng, VP of Research and Safety (Nov 2024)

- Alec Radford, GPT-1 architect (Dec 2024)

- Liam Fedus, VP of Research (Mar 2025)

Notably, in addition to Anthropic, several of these leaders have formed direct OpenAI competitors:

- Chief Scientist Ilya Sutskever left to found SSI with fellow OpenAI engineer Daniel Levy; SSI raised $2B at a $30B valuation

- Mira Murati left to found Thinking Machines, which poached an additional 20 OpenAI researchers, including Luke Metz who led creation of the o1 model and Alec Radford who created GPT-1.

- Igor Babushkin (who left OpenAI in 2022 to join DeepMind) founded xAI with Elon Musk

And Ian Goodfellow left to re-join Google at DeepMind.

Another significant effect of the board's firing of Sam Altman was a replacement of the existing board with a new board. This means that Elon Musk, Reid Hoffman, Trevor Blackwell, and other tech luminaries are no longer helping OpenAI succeed.

The Capabilities Race: OpenAI is No Longer Dominant

Benchmarks Tell the Story

(data from Epoch's benchmarking dashboard, from which pre-July-2024 data can be downloaded.)

Throughout 2023, OpenAI maintained its lead. Claude 2, released months after GPT-4, was slightly better on the GPQA benchmark (0.35 vs 0.31) but much worse on MATH (0.12 vs 0.23).

Subsequent GPT-4 updates in Nov 2023, Jan 2024, and Apr 2024 brought significant gains: GPQA scores rose to the 0.42-0.47 range, and MATH scores jumped to 0.35-0.47. However, competitors rapidly closed the gap: Claude 3 Opus, released in March 2024, achieved comparable scores.

The demise of OpenAI's supremacy came with the release of GPT-4o in May 2024. Its GPQA of 0.49 and MATH of 0.52 were rapidly overtaken by:

- Claude 3.5 Sonnet: Higher GPQA (0.54) and equal MATH scores—the first non-OpenAI model to build a cadre of users loyal to it over ChatGPT

- Meta's Llama-3.1-405B: Competitive on both benchmarks

- Google's Gemini 1.5 Pro: Much superior MATH performance (0.70) and slightly better GPQA (0.57)

By the end of summer 2024, OpenAI's procession to its coronation had become a four-horse race, and it was no longer in the lead. In autumn 2024 it retook pole position with its innovative "reasoning models" o1 and o3.

The release of DeepSeek R1 in January 2025 has been widely described as "AI's Sputnik moment." R1 was competitive on benchmarks, performing similarly to early/mid o1 on GPQA and MATH, albeit lagging behind the later o1 and o3 models on OTIS AIME. One month later, Claude 3.7 Sonnet with Thinking similarly matched o1/o3 on GPQA and MATH. By that measure, OpenAI's lead lasted only a few weeks.

That said, on other benchmarks, notably OTIS Mock AIME and especially FrontierMath, OpenAI's o3 and o4-mini (high) remain the state of the art; and while GPT-4.5 is not state-of-the-art on benchmarks, it is state-of-the-art, often by some distance, among non-reasoning models. These advantages should not be minimized — but two years ago their flagship model was unrivaled, while today they have a complex suite of models and a nuanced set of technological advantages. It's hard to interpret that as anything but a significant diminishment.

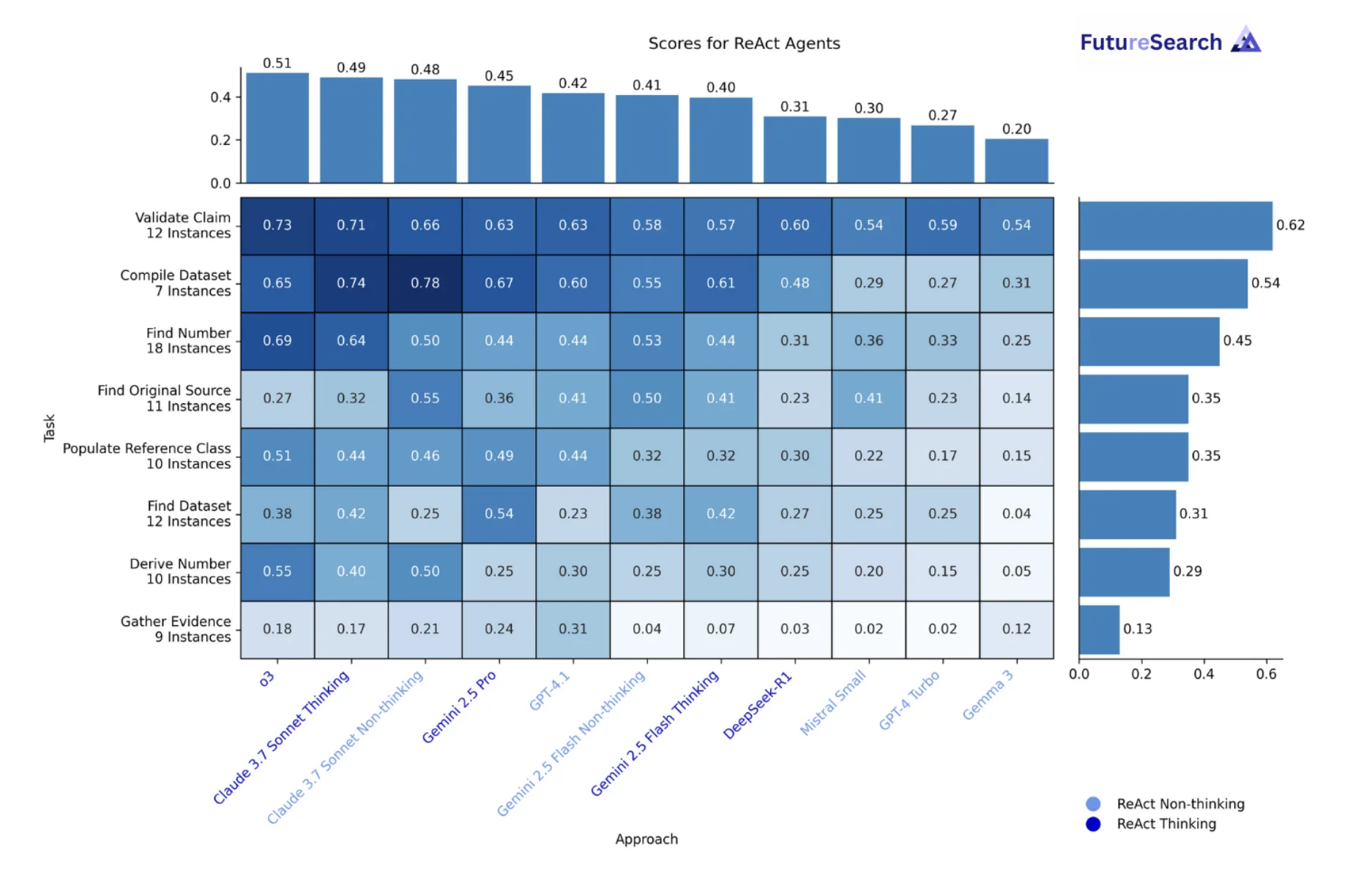

As of May 2025, FutureSearch's new Deep Research Bench shows that ChatGPT-o3+search (though, oddly, not OpenAI Deep Research) is far in the lead on agentic web research tasks; this is the default setup when using ChatGPT with o3.

Price vs. Performance: OpenAI is Mid-Tier

OpenAI is at best mid-tier on price per token relative to quality. Its flagship models are all more expensive than rivals' once benchmark performance is taken into account; even GPT-4o is pricier than Claude 3.7 Sonnet on that basis.

Its "speed" models (o3-mini, o4-mini) cost substantially less than Sonnet, but DeepSeek-R1 is much cheaper yet, and Google's Gemini Flash undercuts even DeepSeek.

LMSys Arena: Falling Behind

The Chatbot Arena, previously known as LMSys Arena, quantifies human preferences for individual LLMs using an Elo rating system. As with benchmarks, OpenAI can still take the lead here when it releases new models, but struggles to keep it: as of March 2025, GPT-4.5 Preview, released only a month earlier, has already fallen to third place, behind Grok-3 and Gemini 2.5. Gemini 2.5 may retain its lead for some time; its 40-point advantage is a significant gap.
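To put a 40-point gap in perspective: on the conventional Elo scale (base 10, 400-point spread), a rating difference maps to an expected head-to-head win rate. Chatbot Arena fits a Bradley-Terry model and reports it on an Elo-like scale, so the formula below is an approximation that ignores ties.

```python
def expected_win_rate(rating_gap: float) -> float:
    """Elo expected score for the higher-rated model given its rating advantage."""
    return 1.0 / (1.0 + 10 ** (-rating_gap / 400))


for gap in (0, 10, 40, 100):
    print(f"{gap:>3}-point lead -> preferred in ~{expected_win_rate(gap):.1%} of matchups")
```

A 40-point lead corresponds to being preferred in roughly 56% of head-to-head votes. That sounds modest, but near the top of the leaderboard, where several models share tied ranks, it is a wide margin.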

OpenAI currently has three models in Chatbot Arena's top 10: GPT-4.5 in 3rd place, GPT-4o-latest (January 2025) tied for 5th, and o1 tied for 8th. By contrast, Google has four models in the top 10, Grok has two, and DeepSeek one.

Outside the top 10, OpenAI's models also score well, taking 11th and 13th place, with two more (o3-mini and o1-mini) tied for 16th, interleaved with models from Alibaba, DeepSeek, Cohere, and even StepFun (a relatively obscure Chinese company), along with Google models and Anthropic's flagship Claude 3.7 Sonnet. In general, Chatbot Arena reinforces the conclusion drawn from the benchmarks: OpenAI remains a strong competitor, but is no longer setting the pace, or even first among equals.

Competition: A Four-Front War

Anthropic: Winning the Coding War

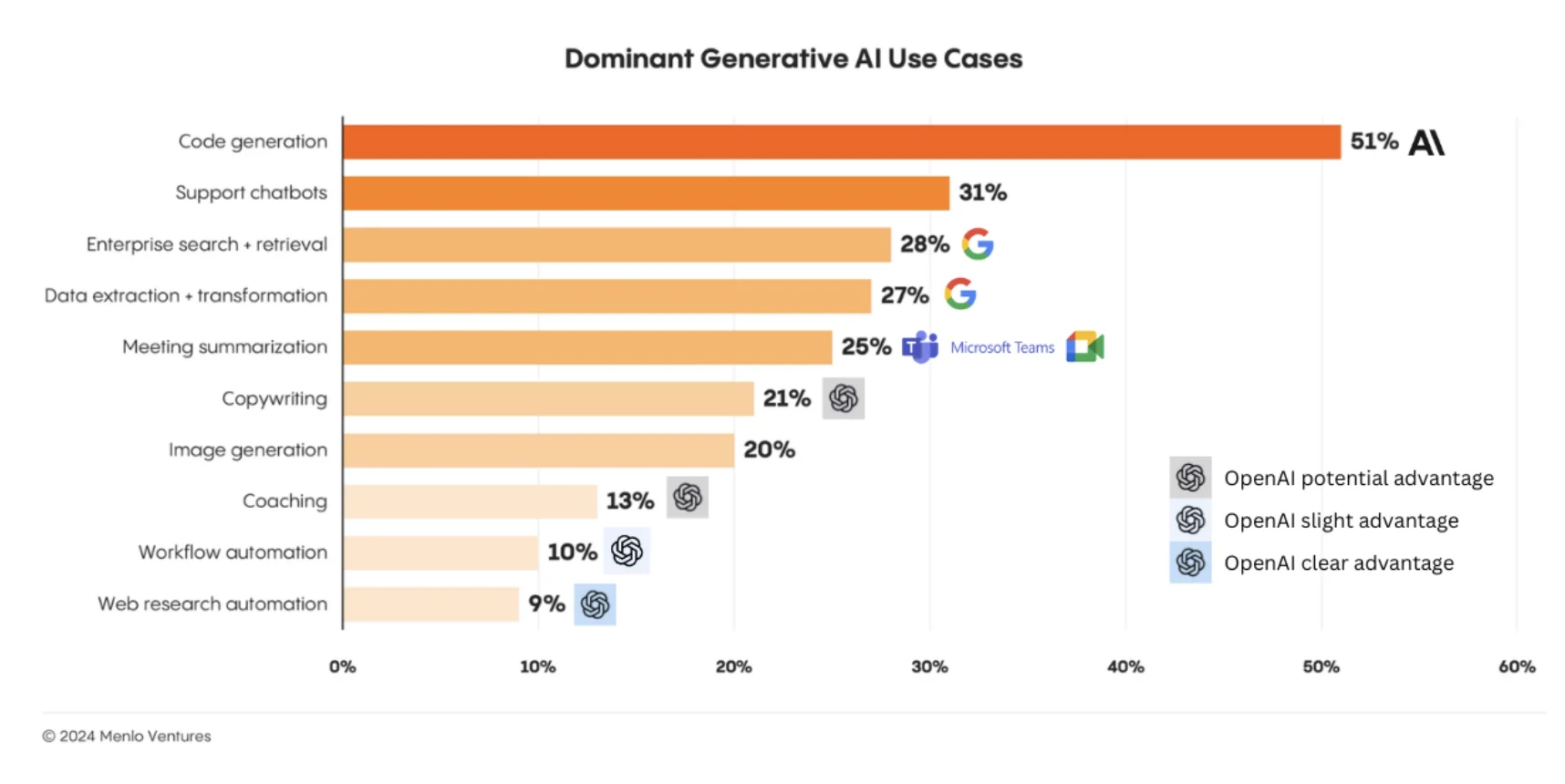

Anthropic poses a unique threat to OpenAI because it is focusing on one particular niche: coding. With code copilots making up 51% of enterprise GenAI use cases in 2024, according to Menlo Ventures, Anthropic is rapidly eating into ChatGPT's enterprise market share (and presumably its share among individual coders):

This chart shows not only that Anthropic poses a real threat in coding, but also that companies are willing to try different models and different providers. This is a serious constraint on OpenAI's API revenue, which we will return to later.

Anthropic has also partnered with Amazon, a relationship that, unlike OpenAI's with Microsoft, has deepened recently and includes use of AWS.

Google: The Sleeping Giant Awakens

Of all the competitors, Big Tech or otherwise, Google is perhaps the most potent single threat to OpenAI at the moment. Despite lacking a unified front end for all its models, and with some still in beta or pre-release, Google has:

- Frontier models (Gemini Pro), competitive with other SOTA models;

- Cheap, fast models (Gemini Flash), and open-source models (Gemma 3);

- Models with explicit Chain-of-Thought (CoT);

- AI Search Mode, à la Perplexity;

- A Data Science Agent;

- Project Astra, a universal (phone) assistant / agent;

- Project Mariner, a browser-based agent;

- Jules, a coding agent;

- NotebookLM;

- A video generation tool, VideoFX;

- An image generation tool, ImageFX;

- A music generation tool, MusicFX;

Google has also released features before OpenAI. For example, Gemini Deep Research was available (in beta) on December 11, 2024, while ChatGPT's Deep Research first became available on February 2, 2025 (Grok's followed on February 19, 2025).

And Google was the first to offer a frontier model with a 1,000,000-token context window, and the first to reach 2,000,000. Meanwhile, OpenAI offers 128,000 tokens for GPT-4.5 and 4o, 200,000 for o3-mini and o1, and 8,192 for GPT-4. By comparison, Claude 3.7 Sonnet has a 200,000-token context window, and Grok claims 1,000,000, though the effective figure may be closer to 128,000. Although models often fail to make full use of their context windows, efforts are under way to improve this.

At the same time, Google has impressive GenAI infrastructure, thanks to the overlapping inference demands of Search and GenAI. So while xAI's Colossus is technically the largest single training cluster, Google has a distributed training network (three data centers in close geographic proximity) that is slightly more powerful. This network has been built over decades, and it runs not on Nvidia or any other vendor's chips but on Google's own in-house TPUs, whose relevant generations currently span 7nm to 3nm chips. Google is set to spend $75B on capital expenditures in 2025, focusing on AI infrastructure, up from $29B in 2024.

Of course, Google has issues, and perhaps these, combined with OpenAI's incumbency advantage, will be enough to prevent Google from achieving GenAI success. Gemini had a disastrous launch; the model was historically the most censored and is probably still trailing; Google's efforts are currently disjointed; and the company has a history of failing to see products through.

But as others have argued, GenAI poses a real risk to Google if it fails to get it right. The company is investing heavily, has cash and the opportunity to bundle GenAI with its other products (as xAI can), and, unlike OpenAI, has revenue streams such as Search to offset large losses, plus the reach to put products in front of huge audiences instantly.

xAI: The Hardware Advantage

xAI is challenging OpenAI primarily in the consumer chatbot space dominated by ChatGPT (Grok 3 doesn't yet have an API).

xAI is unique given its massive singular training center: Colossus. Colossus is made up of 200,000 H100 and H200 GPUs. Elon Musk plans to grow it to at least 1,000,000 GPUs. They seem on track to do this by the end of 2025 or early 2026. By comparison, Stargate is set to house 64,000 Nvidia GB200 chips by the end of 2026, with 16,000 by mid-2025, which would make it roughly 50% faster than Colossus is today by December 2026.
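The "roughly 50% faster" comparison depends on how much compute one GB200 provides relative to an H100. A minimal sketch, assuming each GB200 superchip (two Blackwell GPUs) is worth about 4.5 H100s of dense training throughput and that H200s match H100s on raw compute; both multipliers are our rough assumptions, not verified figures for these specific deployments.

```python
# Assumed relative training throughput, in H100-equivalents per chip (rough).
H100_EQUIV = {
    "H100": 1.0,
    "H200": 1.0,   # same compute die as H100; mainly more and faster memory
    "GB200": 4.5,  # assumption: two Blackwell GPUs at ~2.25x an H100 each
}


def h100_equivalents(fleet: dict) -> float:
    """Total H100-equivalents for a fleet given as {chip_name: count}."""
    return sum(H100_EQUIV[chip] * count for chip, count in fleet.items())


colossus = h100_equivalents({"H100": 200_000})  # H100/H200 mix treated as H100s
stargate_2026 = h100_equivalents({"GB200": 64_000})

print(f"Stargate (end 2026) / Colossus (today): {stargate_2026 / colossus:.2f}x")
```

With these assumptions the ratio is about 1.4x; a GB200 multiplier of about 4.7 would give the 1.5x in the text, so the conclusion is sensitive to that one parameter.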

On the All-In Podcast (at about the one-hour mark), Chamath Palihapitiya summed up the dynamic around xAI well:

When you look at what XAI has done with respect to NVIDIA GPUs, the fact that they were able to get 100,000 to work, as you know, in one contiguous system and are now rapidly scaling up to basically a million over the next year, I think what it does, Jason, is it puts XAI to the front of the line for all hardware. So now, all of a sudden, if you were third or fourth in line, XAI is now first, and it pushes everybody else down. And in doing that, you either have to buy the hardware yourself or work with your partner. And I think for folks like Meta, that translates to and explains why they're spending so much more. It's sort of like an arms race: if you can't spend as much as my competitors, I'm just going to prefer my competitor to you. So I think that creates a capital war. In a capital war, I think the big companies like Google, Amazon, Microsoft, Meta, and brands like Elon will always be able to attract effectively infinite capital, and their cost of capital goes to zero, which means they'll be able to win this hardware war.

This dynamic is important. With OpenAI facing product delays due to compute capacity constraints and its hardware running at near capacity, access to more GPUs is critical. Competitors like xAI and other Big Tech players are a huge impediment to OpenAI's growth, since TSMC is currently the only fab in town, with Intel still some way off.

Moreover, while OpenAI has access to reddit.com's data, xAI has access to X.com's—not to mention xAI will seemingly have access to Tesla camera data, should multi-modal and in particular video learning be useful for improving models' general capabilities. Finally, much like Google, Meta, and soon-to-be-Microsoft, xAI's offerings can be directly embedded into a larger existing ecosystem, exposing it to millions of users (or billions in the case of Meta).

The New Entrants: DeepSeek, SSI, Meta, Alibaba, Microsoft

Finally, in the frontier-model start-up space, DeepSeek cannot be ignored. While the true cost of its training is disputed and questions about potential distillation linger, DeepSeek represents tremendous pressure on OpenAI, if not an outright threat. By releasing cheap, state-of-the-art open-source models like DeepSeek V3 and R1, as well as many of the underlying tools needed to train such models, DeepSeek is putting continual pressure on OpenAI's pricing. Not to mention, state backing enables DeepSeek to put additional pressure on OpenAI that it otherwise wouldn't be able to afford.

Meta, Alibaba and Microsoft

Despite Meta's core role in GenAI's development, the company has seemingly missed the post-ChatGPT train. Zuckerberg appears determined to change that this year. Meta is planning to release Llama 4 within a few weeks. Meta has the money, talent, clear corporate control, and a history of investing in long-term payoffs (see Oculus). The company has also designed and deployed its own silicon for AI inference and training (for search and ranking). Given that it is entering the space relatively late, Meta's launch could make a splash, and the company could offer upfront subsidies to win users, as other labs have done in the past. Plus, Meta can instantly put any product in front of a huge audience, which seems to translate into a large install base (e.g. Threads). Meta plans to spend $65B in 2025, primarily to expand its AI infrastructure.

Alibaba, like DeepSeek, is currently threatening OpenAI via the open-source and pricing pressure route.

In the wake of DeepSeek and other Chinese AI developments, OpenAI is calling for bans on 'PRC-produced' models.

Microsoft cannot be ignored. It is now actively competing with its erstwhile partner (see the section on Microsoft squeezing OpenAI as a risk on the investment side).

Dominant Use Cases: Where Does OpenAI Have an Edge?

While Agents are nascent and OpenAI appears to have a first-mover advantage, Google is close behind. Microsoft and Google have large catalogs of existing tools for agents to integrate into—plus customer bases to sell to.

If a big agent market doesn't materialize, it's hard to see how OpenAI could keep hitting the 300% growth in its projections.

Technical Limitations: Has Progress Stalled?

OpenAI released GPT-4.5 on February 27, 2025, and xAI released Grok 3 on February 19, 2025. Although both are impressive and improve on past models in some (but not all) respects, they have reinforced a widespread notion that large language models (LLMs) have hit a pre-training wall. GPT-4.5 was released nearly two years after GPT-4. It is becoming clear that frontier labs are running into problems. And while synthetic data has helped and will continue to help improve models, there are limits to how far it alone can push performance.

Meanwhile, although models continue to achieve higher scores on benchmarks, these metrics often don't tell the whole story. Real-world performance reveals persistent weaknesses. For example, these write-ups on Claude 3.7 Sonnet's disappointing performance at playing Pokémon, on LLMs' continued struggles to produce truly compelling writing, and on Devin.ai's questionable coding practices all point to underlying limitations.

Furthermore, the apparent surge in LLM-driven code generation is misleading. A 2024 GitClear study revealed a significant rise in code duplication and churn linked to AI code assistants. While AI boosts short-term output, it appears to hurt long-term code maintainability by encouraging copy-pasting and discouraging refactoring. These findings are corroborated by Google's DORA 2024 report, which linked increased AI adoption to decreased "delivery stability" (defects). All of this raises the question: How Much Are LLMs Actually Boosting Real-World (Programmer) Productivity?

All of this feeds a sense that the underlying intelligence of these models has been relatively stagnant since GPT-3 or GPT-3.5, a sense I am not alone in having.

This is perhaps why one of the key innovations going forward is Chain-of-Thought (CoT) models. The idea behind them is not new: prompting techniques that elicit deeper responses from LLMs, such as asking them to show their "thinking" steps, consider multiple perspectives, or break down problems, have existed for some time, in part because they can reduce hallucinations. Nevertheless, the arrival of explicit CoT models has been significant for the GenAI scene, particularly for solving mathematics and programming problems.

CoT's power is undeniable. As Nouhad Ziri states, "generating thousands, hundreds of thousands, or even millions of trajectories and then scoring them... is far from human cognition. It's a brute-force approach that, while undeniably effective, is fundamentally different from human reasoning...CoT combined with RL search and reward modeling scoring is super powerful."
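The quote describes a generate-and-score loop. The minimal best-of-N sketch below shows the brute-force shape of that idea on a toy task. It is an illustration, not any lab's actual method: `sample_trajectory` and `reward` are hypothetical stand-ins for a language model sampling reasoning traces and a learned reward model scoring them.

```python
import random
from typing import Callable, List, Tuple


def best_of_n(
    sample: Callable[[random.Random], str],
    reward: Callable[[str], float],
    n: int,
    seed: int = 0,
) -> Tuple[str, float]:
    """Draw n candidate trajectories, score each, and return the best one."""
    rng = random.Random(seed)
    candidates: List[str] = [sample(rng) for _ in range(n)]
    scored = [(c, reward(c)) for c in candidates]
    # Keep the highest-scoring candidate (first one wins on ties).
    return max(scored, key=lambda pair: pair[1])


# Hypothetical toy task: find two digits that sum to 10.
def sample_trajectory(rng: random.Random) -> str:
    a, b = rng.randint(0, 9), rng.randint(0, 9)
    return f"{a}+{b}={a + b}"


def reward(trajectory: str) -> float:
    # 1.0 for a total of exactly 10, less the further the total is from 10.
    total = int(trajectory.split("=")[1])
    return 1.0 - abs(total - 10) / 10


best, score = best_of_n(sample_trajectory, reward, n=1000)
print(best, score)
```

Nothing in the loop "reasons"; quality comes from sampling many candidates and filtering. Compute therefore grows linearly with `n`, which is why cost is the first constraint on this approach.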

However, CoT faces inherent limitations. First, the computational cost of running extensive chains of thought will be a significant constraint. Second, and relatedly, model performance degrades with longer sequences due to representational collapse and over-squashing. Third, and perhaps most fundamentally, it is unclear whether LLMs engage in any form of genuine formal reasoning. Research such as Apple's "GSM-Symbolic" paper shows that models exhibit significant fragility and variability when faced with even minor variations of a question, which suggests a reliance on pattern-matching rather than true logical reasoning. While some models (like Llama3-8b and GPT-4o) show less performance degradation, the underlying vulnerability persists. "Faith and Fate: Limits of Transformers on Compositionality" highlights this fragility further, hypothesizing that LLMs reduce complex reasoning to "linearized subgraph matching": sophisticated pattern recognition rather than true rule-based computation. Even seemingly simple tasks like multiplication can expose these weaknesses, as models like o1-mini demonstrate.
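The GSM-Symbolic idea is to turn a grade-school math problem into a symbolic template, then generate many variants by changing names and numbers while computing the correct answer from the template itself. The sketch below illustrates that mechanism. The template, name pool, and number ranges are made up for illustration and are not taken from the paper's dataset.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Variant:
    question: str
    answer: int


NAMES = ["Sophie", "Liam", "Ava", "Mateo"]  # hypothetical name pool


def make_variant(rng: random.Random) -> Variant:
    name = rng.choice(NAMES)
    x = rng.randint(5, 50)      # apples picked on Monday
    y = rng.randint(5, 50)      # apples picked on Tuesday
    z = rng.randint(1, x + y)   # apples given away; keeps the answer non-negative
    question = (
        f"{name} picks {x} apples on Monday and {y} apples on Tuesday, "
        f"then gives away {z}. How many apples does {name} have left?"
    )
    # The ground truth comes from the template, not from parsing the text.
    return Variant(question, x + y - z)


def generate_variants(n: int, seed: int = 0) -> List[Variant]:
    rng = random.Random(seed)
    return [make_variant(rng) for _ in range(n)]


for v in generate_variants(3):
    print(v.question, "->", v.answer)
```

Every variant requires exactly the same reasoning, so a model that truly reasons should score about the same on all of them; large accuracy swings across variants point toward pattern-matching on surface features.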

Part 3: Our Forecast

10th Percentile Scenario ($11B ARR): What if Most Things Go Wrong?

We think there is at least a 10% chance that OpenAI will be outpaced by its many competitors and lose its revenue lead. OpenAI seems to have a tense relationship with Microsoft, which appears to be positioning itself to compete directly with OpenAI. Significant talent has already left OpenAI, and the exodus could accelerate if staff begin to think the company is in decline.

Finally, it seems plausible that OpenAI could be constrained by data center capacity, limiting its ability to meet high demand. There are already signs of this: Sam Altman recently warned that users should expect delayed releases due to capacity constraints.

If these problems were to materialize, OpenAI's revenue could plausibly peak in 2026 and even backslide somewhat by 2027. Accordingly, we forecast a 10% chance that OpenAI's ARR in mid-2027 is $11B or lower, only slightly more than where we estimate ARR is today.

90th Percentile Scenario ($70B ARR): What if Most Things Go Right?

In Part 1 we presented a breakdown of what OpenAI's revenue trajectory might look like if everything goes right. That scenario includes significantly higher consumer demand for ChatGPT. Even more importantly, it relies on autonomous agents that start to replace human workers in customer service, software engineering, and R&D. We think there is at least a 10% chance that OpenAI is first to market with agents that prove highly valuable across a variety of professions. Our 90th percentile forecast is $70B in ARR by mid-2027 (that is, a 10% chance of $70B or more), which is close to the revenue breakdown presented in Part 1.

50th Percentile Scenario ($39B ARR): Our Median Forecast

What does the median scenario look like? Our best guess is that OpenAI will maintain its lead but see growth moderate to the high double digits as competition from Anthropic and others puts pressure on its business. We expect GenAI as an industry to continue its unparalleled growth and adoption, but we do not expect many agents capable of fully replacing humans by mid-2027.

Our median forecast is that OpenAI grows to $39B ARR by mid-2027.

Final Forecast

Putting all of the above together, our final forecast is $39B ARR by mid-2027, with an 80% confidence interval of [$11B, $70B].

This compares with $44B in projected ARR by 2027, as reported by The Information, so our median is slightly more conservative.
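To put the three scenarios in perspective, the sketch below computes the compound annual growth each one implies. It assumes a starting point of roughly $8B ARR in March 2025 (our estimate from earlier) and treats "mid-2027" as June 2027, about 27 months later. Both the start date and the end date are simplifying assumptions.

```python
START_ARR_B = 8.0   # approximate ARR in March 2025, in $B
YEARS = 27 / 12     # March 2025 -> June 2027 (assumed "mid-2027")

# Mid-2027 ARR forecast by percentile, in $B
SCENARIOS = {"p10": 11.0, "p50": 39.0, "p90": 70.0}


def implied_cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1 / years) - 1


for name, arr in SCENARIOS.items():
    rate = implied_cagr(START_ARR_B, arr, YEARS)
    print(f"{name}: ${arr:.0f}B -> {rate:.0%} per year")

print(f"p90 / p10 ratio: {SCENARIOS['p90'] / SCENARIOS['p10']:.1f}x")
```

Under these assumptions, the median works out to roughly 100% annualized growth over the period, the 10th percentile to about 15%, and the 90th percentile to about 160%. The top of the interval is about 6.4x the bottom. Because the median is an average over the whole period, it is compatible with growth that starts near today's pace and slows over time.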

The extraordinary width of our confidence interval, with the top more than 6x the bottom ($70B vs. $11B), reflects the unprecedented uncertainty in this rapidly evolving market. OpenAI stands at a crossroads: the path to becoming one of the most valuable companies in history is clear, but so are the risks that could derail it.